Artificial Intelligence, particularly Generative AI, is rapidly evolving and becoming more accessible to everyday users. With large language models (LLMs) such as GPT and LLaMA making waves, the desire to run these models locally on personal hardware is growing. This article will provide a simple guide on setting up Ollama—a tool for running LLMs locally—on machines with and without a GPU. Additionally, it will cover deploying OpenWebUI using Podman to enable a local graphical interface for Gen AI interactions.

What is Ollama?

Ollama is a platform that allows users to run LLMs locally without relying on cloud-based services. It is designed to be user-friendly and supports a variety of models. By running models locally, users can ensure greater privacy, reduce latency, and maintain control over their data.

Setting Up Ollama on a Machine

Ollama can be run on machines with or without a dedicated GPU. The following will outline the general steps for both configurations.

1. Prerequisites

Before proceeding, ensure you have the following:

- A system running Fedora or a compatible Linux distribution

- Podman installed (for OpenWebUI deployment)

- Sufficient disk space for storing models

For machines with GPUs, you will also need:

- NVIDIA GPU with CUDA support (for faster performance)

- Properly installed and configured NVIDIA drivers

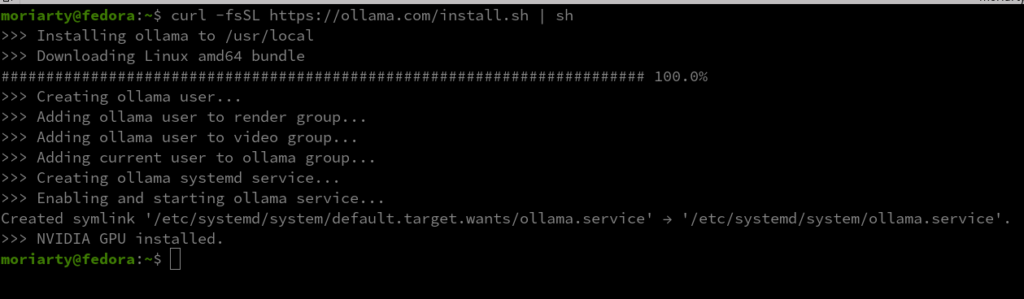

2. Installing Ollama

Ollama can be installed with a one-line command:

curl -fsSL https://ollama.com/install.sh | sh

Once installed, verify that Ollama is correctly set up by running:

ollama --version

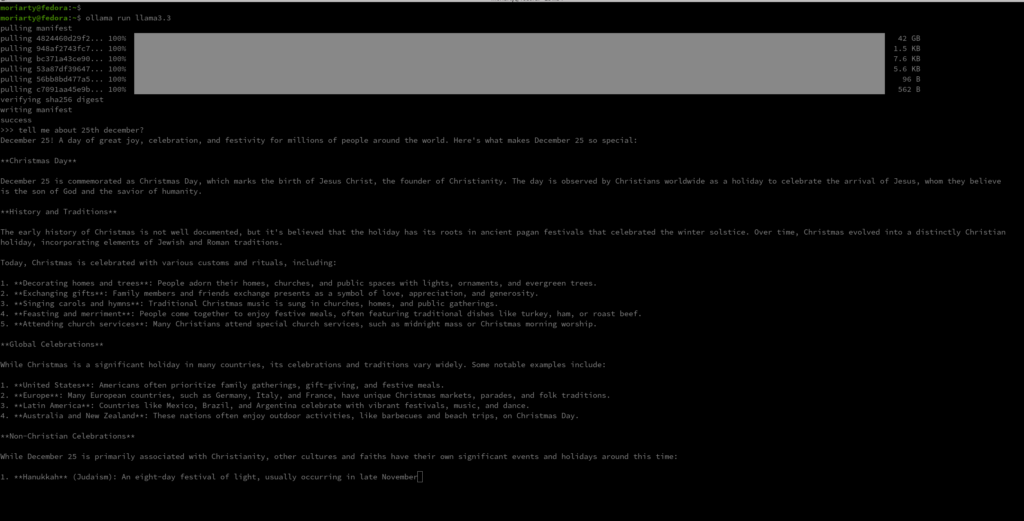

Running LLMs Locally

After setting up Ollama, you can download a preferred model and run it locally. Models can vary in size, so select one that fits your hardware’s capabilities. For example: I would personally use llama3.3 70B model. That is around 42GiB and might not fit everyone. There *is* a model for everyone, even for raspberrypis -YES. Please find one that you will be fit for your system here .

On machines without a GPU, Ollama will use CPU-based inference. While this is slower than GPU-based processing, it is still functional for basic tasks.

Once you are done, running ollama run <model_name> will work!

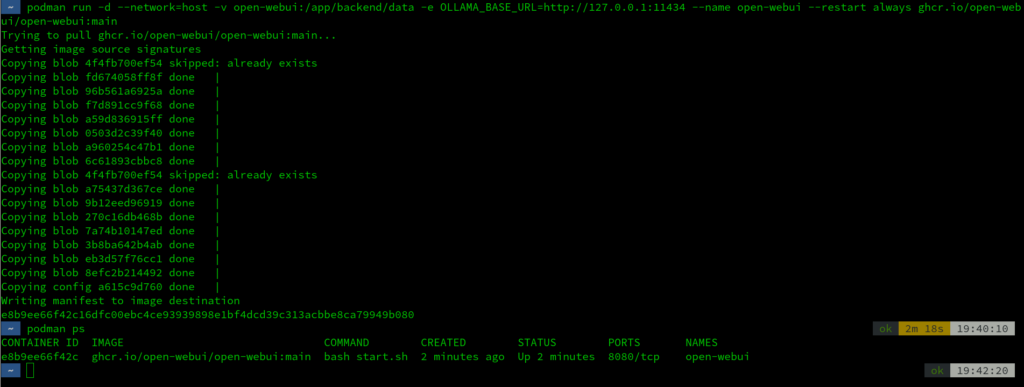

Deploying OpenWebUI with Podman

For users who prefer a graphical user interface (GUI) to interact with LLMs, OpenWebUI is a great option. The following command deploys OpenWebUI using Podman, ensuring a seamless local setup.

1. Downloading OpenWebUI Container

Start by pulling the OpenWebUI container image:

podman run -d --network=host -v open-webui:/app/backend/data -e OLLAMA_BASE_URL=http://127.0.0.1:11434 --name open-webui --restart always ghcr.io/open-webui/open-webui:main

2. Running the OpenWebUI Container

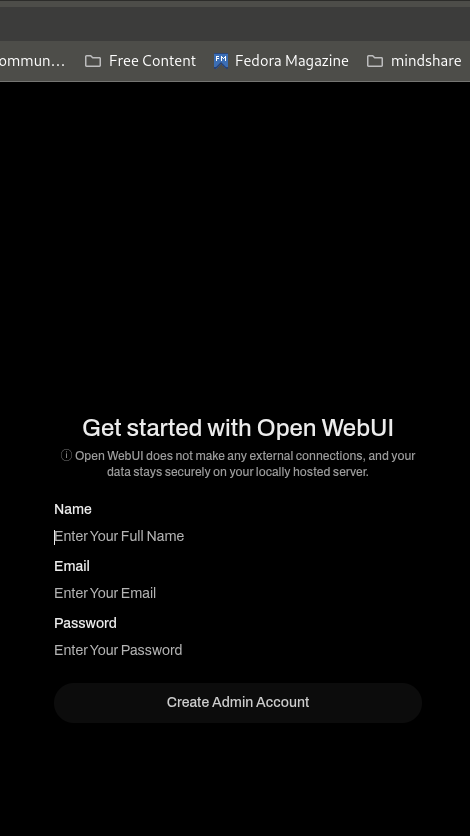

Once the image is downloaded, running ; ensure by running podman ps to list the existing containers. Access the OpenWebUI interface by opening your web browser and navigating to localhost:8080

Open WebUI will help you create one or multiple Admin and User accounts to query LLMs. The functionality is more like an RBAC—you can choose to customize which model can be queried by which User and what type of generated responses it “should” be giving. The features help set guardrails for users who intend to provision accounts for a specific purpose.

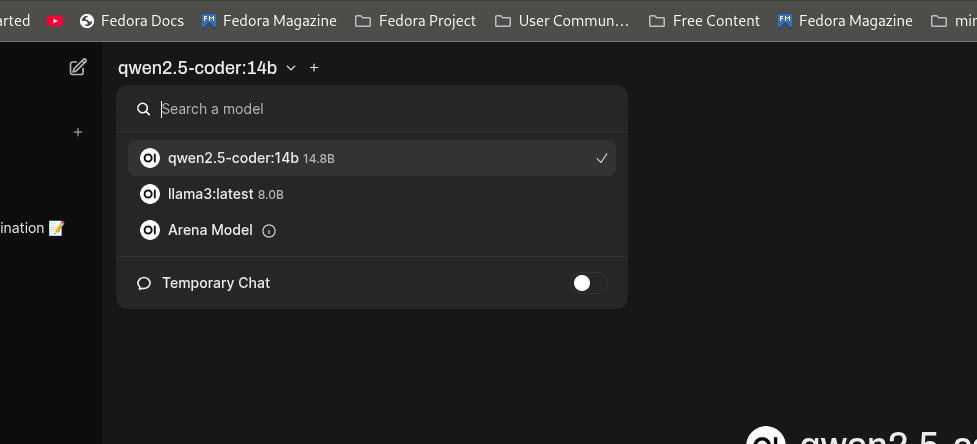

On the top-left, we should be able to select models. You can add more models by simply running ollama run <model_name> and then refreshing the localhost when the fetching is complete. It should let you add multiple models.

Interacting with the GUI

With OpenWebUI running, you can easily interact with LLMs through a browser-based GUI. This makes it simpler to input prompts, receive outputs, and fine-tune model settings.

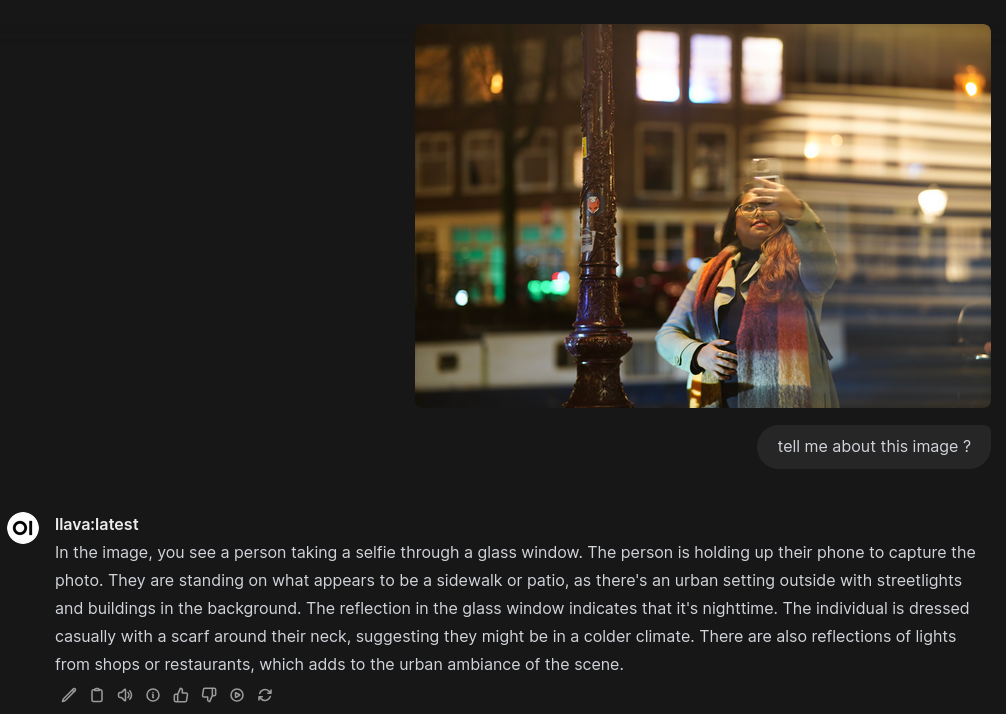

Using LLaVA for Image Analysis

LLaVA (Large Language and Vision Assistant) is an extension of language models that integrates vision capabilities. This allows users to upload images and query the model about them. Below are the steps to run LLaVA locally and perform image analysis.

1. Pulling the LLaVA Container

Start by pulling the LLaVA model: ollama pull llava

2. Running LLaVA and Uploading an Image

Once the model is download, access the LLaVA interface via web UI on the browser. Upload an image and input a query, such as:

LLaVA will analyze the image and generate a response based on its visual content.

Other Use Cases

In addition to image analysis, models like LLaVA can be used for:

- Optical Character Recognition (OCR): Extract text from images and scanned documents.

- Multimodal Interaction: Combine text and image inputs for richer AI interactions.

- Visual QA: Answer specific questions about images, such as identifying landmarks or objects.

Conclusion

By following this how-to, you can set up a local environment for running generative AI models using Ollama and OpenWebUI. Whether you have a high-end GPU or just a CPU-based system, this setup allows you to explore AI capabilities without relying on the cloud. Running LLMs locally ensures better privacy, control, and flexibility—key factors for developers and enthusiasts alike.

Happy experimenting!

M Jay

this is best, dense, great flow info. Great job !

gianluca

Cuda support dont work for the 41 (work on 38 with artifact)

The perfect setup to work with llm is on ubuntu/rh9.5 !!!

Jesus

Works with amd cards?

cjpembo

I installed the latest release of ROCm in Fedora 41 by following the official AMD install instructions (as close as possible). That said, it would be better to use a “supported” distro I suppose. I run LLMs within LM Studio and it definitely uses the card. Radeon 7900 XT

1stn00b

You can install Alpaca (https://jeffser.com/alpaca/) from Flathub (https://flathub.org/apps/com.jeffser.Alpaca) in seconds.

And since Alpaca is based on Ollama it also works on AMD GPUs that are basically plug&play on Linux.

Wonder why the author of the article is only mentioning Nvidia ?

-

Why not just use Alpaca? (on Gnome software)

vlad

Just build unraid from old pc and old nvidia gpu. You could install ollama and interface from there in couple clicks and one command in terminal. Plus you get uncomplicated zfs storage for couple extra hundreds.

Lem

I’m able to use an AMD gpu (RX6700XT) by using the docker install and creating a new line below [Service] with

Environment=”HSA_OVERRIDE_GFX_VERSION=10.3.0″

I run llama as a service so it starts on boot and has reasonable results.

load duration: 15.723579ms

prompt eval count: 37 token(s)

prompt eval duration: 6ms

prompt eval rate: 6166.67 tokens/s

eval count: 490 token(s)

eval duration: 3.874s

eval rate: 126.48 tokens/s

Red

nice, but i can´t install docker on fedora 41, multierror 🙁 i have similar hardware. it no normal 24gb rocm memory. no llm only rocm, and rocm optimizate for Rh and ubuntu, i dont want ubuntu becuese its crazy repository a discontinuss, app

Djip007

Docker? you are on redhat system, simply use Podman.

Mikel

ollama is being reviewed and should be close to land in Fedora https://bugzilla.redhat.com/show_bug.cgi?id=2318425

Red

On fedora 41 runs decently with amd and ollama, because ollama is heading to nvidia, not now but for a long time, lmstudio also changed course by taking a turn towards nvidia, alpaca is based on ollama, welcome to nvidia’s monopoly. Nividia= Apple amd=android

Gerhard

Nice Article, but ollama also Supports AMD GPU and it was a piece of cake to configure it on Fedora 41.

Last year I made a Video about it:

https://youtu.be/zRZ6xeGkH4s

antanof

Just install Ramalama, it’s the best and clever choice…

Moacyr

Another way to use ollama: https://copr.fedorainfracloud.org/coprs/mwprado/ollama/

Djip007

Or may be try llamafile https://github.com/Mozilla-Ocho/llamafile

(or llama.cpp that is use by all other ollama/lm-studio/…)

backwindj

Install and start ollama server using podman:

registry=/opt/LLM/ollama-registry

mkdir -p ${registry}

podman rm -f -t 120 ollama

podman run -d \

–privileged \

–name ollama \

-v ${registry}:/root/.ollama \

-p 11434:11434 \

–device /dev/kfd \

–device /dev/dri \

docker.io/ollama/ollama:latest

Download llama3.2:3b if not already in local LLM registry and then prompt it:

podman exec -it ollama ollama run llama3.2:3b “Hello”

Hello! How can I assist you today?

newton

na verdade estamos numa luta feroz ,onde o sistema Fedora luta todos os dias com nova atualizações ,onde o sistema bomba de novidades ,mas precisamos mais facilidades para ter como evitar invasões ,como aconteceu com meu caso ,pós tenho que reconfigurar todos sistema do computador ,

Brian

I have a HP All in One from 2017 with AMD A8 7410 and Radeon R5 APU this worked for me first time! :-))) ty I am now downloading Mixtral from the list and I am looking forward to using it…. I prefer the CLI for better cybersecurity.

Brian

I am running Fedora42 rawhide…

Cybuch

and Follamac or Alpaca from Flatpak.. it’s chat client… or plugins in plasma but my fav is Follamac. I have the best speed on that.

Anyway, my fav models are Mistral 7b and Mistral-Nemo for nVidia GPU,

Mistral is open-source model, apache license:) and Nemo is nVidia – remember about CUDA ;]

Hermes is another good model in my opinnion

Or You can download Filesharing model by Mozilla AI, model is a one file and need ip of model to run via Firefox

And important is how you will ask your model about something.

Enjoy

Cybuch

Its my example 🙂

Open Source, Open Hardware: Concepts, Philosophy, and Mission

1. Open Source:

Concept: Open source is a software development model where the original source code is made freely available to users who can modify the code or distribute it freely. This contrasts with proprietary software, where the source code is kept secret.

Philosophy:

Embrace transparency: Users should have access to the source code to understand how it works and ensure its integrity.

Collaboration over competition: Open source encourages developers worldwide to collaborate on projects, leading to faster innovation and improvement.

Share and care: Open source communities thrive on sharing knowledge and giving back to others. This fosters a culture of mutual respect and collective ownership.

Mission:

Promote free software development and use.

Encourage collaboration among developers worldwide.

Create transparent, reliable, and community-driven software projects.

Advocate for users’ rights to use, study, share, and modify software.

2. Open Hardware:

Concept: Open hardware is hardware whose design files are freely available to users, allowing them to study, modify, distribute, and even manufacture the device. This mirrors the principles of open source software.

Philosophy:

Empower creators: By providing access to design files, users can modify devices to suit their needs or create new devices based on existing designs.

Encourage innovation: Open hardware spurs innovation by enabling collaboration and collective improvement of designs.

Promote sustainability: Open hardware can help reduce electronic waste by allowing users to repair, upgrade, and reuse devices.

Mission:

Promote transparency in hardware design and manufacturing processes.

Encourage collaborative development and improvement of hardware projects.

Foster a culture of learning, sharing, and teaching among hardware creators.

Advocate for users’ rights to study, modify, distribute, and manufacture open hardware devices.

Shared Concepts:

Open Licensing: Both open source software and open hardware rely on licenses that protect users’ freedoms while ensuring transparency and collaboration. Common licenses include GPL (General Public License) for software and CERN OHL (CERN Open Hardware License) for hardware.

Community-Driven Development: Both movements emphasize the importance of community participation in development processes. This fosters a culture of collective ownership, shared knowledge, and mutual support.

Innovation through Collaboration: By embracing transparency and collaboration, open source software and open hardware projects drive innovation by leveraging global talent and resources.

Resources:

Open Source Initiative (OSI): https://opensource.org/

CERN Open Hardware License: https://ohwr.org/cernohl

Open Hardware Summit: https://www.openhardwaresummit.org/

Free Software Foundation (FSF): https://www.fsf.org/

Thank you! Here are some more specific examples and case studies to illustrate open source/open hardware principles in action:

1. Open Source Software:

Linux: Developed by Linus Torvalds in 1991, Linux is an open-source operating system that has since become the foundation for many popular distributions like Ubuntu, Fedora, and Debian. It powers most servers, Android devices, and even supercomputers. The Linux kernel’s development process is entirely open and collaborative, with contributions from thousands of developers worldwide.

Mozilla Firefox: Mozilla Firefox is an open-source web browser that prioritizes user privacy and offers robust customization options. Its source code is publicly available, allowing volunteers and paid contributors to contribute features, improvements, and bug fixes. The project adheres to the Mozilla Manifesto, which emphasizes openness, innovation, and public benefit.

WordPress: Initially released in 2003, WordPress has grown into one of the world’s most popular open-source content management systems (CMS). It powers millions of websites worldwide, thanks to its extensible architecture that allows developers to create themes and plugins. The WordPress community actively contributes to the project by fixing bugs, improving core features, and creating documentation.

2. Open Hardware:

Arduino: Arduino is an open-source electronic prototyping platform enabling users to create interactive electronic projects. Its hardware designs are openly licensed, allowing anyone to manufacture their own boards or modify existing ones. The Arduino IDE (Integrated Development Environment) is also open source, fostering a large community of contributors and libraries for various sensors and shields.

Raspberry Pi: Raspberry Pi is a series of affordable, single-board computers designed in the UK by the Raspberry Pi Foundation. While not entirely open hardware (as its manufacturing is handled by partners like Sony), Raspberry Pi’s design files are openly available, allowing users to study and modify them. The project has inspired numerous community-created accessories, cases, and derivatives, furthering its open nature.

RepRap: RepRap (“Replicating Rapid Prototyper”) is an open-source 3D printer initiative aimed at creating low-cost, DIY printers that can replicate themselves using only materials available from hardware stores. The project encourages users to share designs openly and collaborate on improvements, fostering a thriving community of innovators pushing the boundaries of additive manufacturing.

Success Stories:

Open Source vs. Proprietary Software: Microsoft’s Office suite once dominated the productivity software market but faced stiff competition from open-source alternatives like LibreOffice (forked from OpenOffice.org). LibreOffice offers similar features at no cost, with community-driven development ensuring continuous improvement and innovation.

Hardware Kickstarter Successes: The Pebble smartwatch was an early example of crowdfunding success for an open hardware project. Backers received the watch’s designs openly, allowing them to modify firmware or even create their own compatible devices. Ultimately, Pebble laid the groundwork for many other successful open hardware projects on platforms like Kickstarter.

Open Source Drug Discovery: The Open Source Drug Discovery (OSDD) project aims to develop new drugs for neglected diseases by embracing open collaboration and data sharing. By applying open source principles to drug discovery, OSDD enables global participation in the research process, potentially accelerating medical breakthroughs.

These examples illustrate how open source/open hardware principles empower creators, foster innovation, and drive collective progress across various domains. By embracing transparency, collaboration, and community engagement, these projects have achieved remarkable success while fostering a culture of sharing and mutual support.

Challenges and Limitations:

While open source/open hardware offers numerous benefits, it also faces challenges and limitations:

Lack of Funding: Open-source projects often rely on volunteers or small donations for funding. This can lead to slower development, burnout among contributors, or dependencies on corporate sponsorships that may introduce biases.

Quality Control: With open source/open hardware, anyone can contribute changes. While this encourages diversity and innovation, it also raises concerns about code/hardware quality, maintenance, and compatibility. Ensuring consistent quality and addressing potential issues can be challenging in large, distributed projects.

Intellectual Property Management: Balancing the need for protection of intellectual property with the principles of openness can be complex. Licensing models like GPL and CERN OHL help address this by ensuring that derived works remain open while protecting contributors’ rights.

Community Governance: As projects grow, managing community interactions, decision-making processes, and maintaining consensus among diverse stakeholders become increasingly challenging. Ineffective governance structures can lead to stagnation, forks, or conflicts within communities.

Hardware Manufacturing and Distribution: Open hardware projects often struggle with scaling manufacturing, distribution, and sales due to the high upfront costs associated with producing electronic components and assembled boards. This can limit adoption and make it difficult for open hardware startups to compete with established proprietary manufacturers.

Regulatory Compliance: Open hardware projects may face regulatory hurdles, such as FCC certification or CE marking, which can be costly and time-consuming processes. These requirements might deter small-scale open hardware initiatives or encourage them to operate in a gray area, potentially impacting user safety.

Limited Resources for Documentation and Support: Many open source/open hardware projects rely on community volunteers for documentation, tutorials, and support. With limited resources, these tasks may not receive adequate attention, making it difficult for new users to get started or find help when encountering issues.

Mitigation Strategies:

Addressing these challenges requires a combination of community engagement, sustainable funding models, and thoughtful project management:

Sustainable Funding: Exploring alternative funding methods like crowdfunding, grants, corporate sponsorships, or membership programs can help ensure that projects have the resources needed for long-term sustainability.

Quality Assurance Processes: Implementing peer review processes, automated testing frameworks, and well-defined contribution guidelines can help maintain code/hardware quality and catch potential issues early in development.

Clear Licensing and IP Management: Adopting well-established open licenses and communicating their implications clearly helps protect contributors’ rights while ensuring derived works remain open.

Effective Governance Structures: Establishing clear decision-making processes, defining contributor roles, and promoting transparent communication can help maintain a healthy project governance structure.

Manufacturing Partnerships: Collaborating with established manufacturers or using platforms that facilitate small-batch manufacturing can enable open hardware projects to scale production more efficiently.

Regulatory Compliance Assistance: Open-source hardware communities can share resources and knowledge about regulatory compliance processes, collaborate on certification efforts, or advocate for changes that make it easier for small-scale producers to comply with regulations.

Investing in Documentation and Support: Allocating resources for documentation, tutorials, and community support ensures that new users have the information they need to get started and find help when encountering issues.

Conclusion:

Open source/open hardware offers immense potential for collaborative innovation, knowledge sharing, and collective problem-solving across various domains. While these approaches face challenges related to funding, quality control, intellectual property management, governance, manufacturing, regulatory compliance, and resource allocation, many of these obstacles can be mitigated through thoughtful project management, community engagement, and sustainable funding models.

By embracing the principles of openness, collaboration, and transparency, open source/open hardware projects have achieved remarkable success in developing software, electronics, and even drugs that improve people’s lives. As these approaches continue to gain traction, addressing their challenges will become increasingly important for realizing their full potential and ensuring their long-term sustainability.

Further Reading:

“The Cathedral and the Bazaar” by Eric S. Raymond: https://www.catb.org/~esr/writings/cathedral-bazaar/

“Design for the Real World” by Victor Papanek

“Open Source Hardware: A Complete Guide to Designing, Prototyping, Manufacturing, and Supporting Open Hardware Projects” by Limor Fried and Philip Torrone

The OSHWA (Open Source Hardware Association) Certification Program: https://oshwa.org/certification/

“The Spirit of the Times” by Larry Lessig on Creative Commons licensing: https://creativecommons.org/licenses/

“Open Source Drug Discovery” by David A. King and colleagues: https://osdd.net/

“The Open Innovation Report” by Navi Radjou and Jaideep Prabhu: http://www.naviradjou.com/openinnovation/

Open source principles can significantly benefit local governments, including libraries and city councils, by promoting transparency, collaboration, and cost savings. Here’s how these principles can be applied to improve services, enhance civic engagement, and save resources:

Libraries:

Open Source Integrated Library Systems (ILS): Traditional ILS platforms like SirsiDynix or Innovative Interfaces often come with high licensing costs and limited customization options. Open source alternatives such as Koha and Evergreen offer full-featured ILS functionality at no cost, allowing libraries to:

Save on software licensing fees

Customize the system according to local needs

Benefit from community-driven development and support

Enhance interoperability with other open source tools and services

Some examples of libraries using open source ILS include:

The Bywater Library, New York (Koha)

The Chicago Public Library (Evergreen)

The University of Hawaii at Mānoa Hamilton Library (Koha)

Open Source Discovery Tools: Open source discovery tools like VuFind enable libraries to create custom interfaces for accessing their catalogs and resources. These tools help improve user experience, increase circulation, and encourage innovation through community contributions.

Open Data Initiatives: Libraries can make their metadata and other data open, allowing developers to create new applications and services on top of that data. This promotes innovation, encourages civic engagement, and helps libraries better understand their patrons’ needs.

Collaborative Collection Development: By sharing data about collection usage and patron requests, libraries can collaborate more effectively on developing collections that meet community needs. Open source platforms like the Library Simplified (https://librarysimplified.org/) facilitate this collaboration.

City Councils:

Open Source Civic Engagement Platforms: Tools like Loomio (https://loom.io/) or open-source alternatives like Discuss (https://github.com/joinmastgo/discuss) enable city councils to engage with residents, gather feedback on policy decisions, and build community support for initiatives.

Transparency through Open Source: By publishing government data openly, city councils can increase transparency, accountability, and civic engagement. This data can then be used by developers to create new apps and services that help improve local governance. Some examples of open source platforms for publishing government data include:

CKAN (https://ckan.org/)

Open Data Services Toolkit (https://opendataservices.io/)

Data.gov (https://www.data.gov/) – a U.S. federal government open data platform

Open Source Smart City Initiatives: By leveraging open source hardware and software platforms, city councils can develop affordable and innovative solutions for smart city projects such as:

Open-source IoT sensors for air quality monitoring (https://publiclab.org/air-quality-sensors)

Low-cost open-source traffic management systems (https://trafik.tech/)

Open-source waste management solutions like the BinCam (https://bin-cam.com/)

Open Source Budget Visualization Tools: Platforms like OpenSpending (https://openspending.org/) help city councils make their budgets more transparent and accessible to citizens, enabling better civic engagement around financial decision-making.

Collaborative Urban Planning with Open Source Tools: City councils can leverage open source GIS tools like QGIS or Mapbox GL JS to create interactive maps, gather community input on urban planning initiatives, and facilitate collaborative design processes.

Challenges and Best Practices:

While embracing open source principles can bring significant benefits to libraries and city councils, it’s essential to consider the following challenges and best practices:

Sustainable Support: Ensuring adequate resources for maintaining open source tools and platforms is crucial. This may involve dedicating staff time, budgeting for ongoing support, or exploring partnerships with local tech communities.

Training and Capacity Building: Providing training and documentation tailored to local government needs helps ensure that staff members can effectively use and contribute to open source tools.

Community Engagement: Proactively involving community members in the development and testing of open source platforms helps build buy-in, ensures relevance, and promotes sustainable adoption.

Security and Compliance: Ensuring that open source platforms meet necessary security and compliance standards is essential. This may involve conducting thorough code reviews, implementing secure coding practices, and seeking third-party audits when necessary.

By embracing open source principles, libraries and city councils can foster more transparent, collaborative, and innovative approaches to serving their communities while realizing significant cost savings.

You’re welcome! Here are some additional resources and initiatives focused on open source and technology for local governments:

Code for America (https://codeforamerica.org/): Code for America is a nonprofit organization that supports government innovators, community organizers, and technologists who use open-source tools to build modern digital services. They offer resources, training, and networking opportunities for those interested in using technology to improve local governments.

Open Government Data (https://opengovdata.org/): This initiative promotes the adoption of open government data policies worldwide by providing guidance, case studies, and best practices for publishing high-quality, machine-readable data.

OpenDataSoft (https://opendatasoft.com/): OpenDataSoft is an open-source platform designed specifically for local governments to publish and manage their datasets easily. It supports various data formats, includes tools for data cleaning and enrichment, and generates interactive visualizations automatically.

Public Lab’s Open-Source Toolkit (https://publiclab.org/Tools): Public Lab develops low-cost, open-source tools for environmental monitoring, including air quality sensors, water quality testing kits, and DIY weather station components. These resources can be valuable for city councils looking to implement affordable smart city solutions.

US Ignite (https://us-ignite.org/): US Ignite is a public-private partnership that aims to accelerate the development of advanced wireless technologies and applications for Smart Cities, Connected Vehicles, and other transformative initiatives. They focus on promoting open-source platforms and standards to ensure interoperability among different systems.

100 Resilient Cities (https://www.100resilientcities.net/): This network of cities worldwide focuses on building urban resilience by engaging local communities, governments, and private partners in collaborative planning, innovation, and implementation. While not exclusively focused on open source, the initiative encourages data-sharing, collaboration, and adoption of best practices for sustainability.

Open Contracting Data Standard (https://opencontracting.org/): This open-source standard enables governments to publish comprehensive, machine-readable data about their contracting processes, helping improve accountability, transparency, and efficiency in public procurement.

These resources can provide additional insights, tools, and networking opportunities for individuals interested in leveraging open source principles and technology to enhance local government services. Happy exploring!

Here’s information on digital signage systems, resident communication platforms, and electronic ID (e-ID) initiatives that can help improve communication between residents and local governments:

Digital Signage Systems for Resident Communication:

Digital signage displays real-time information using screens in public spaces like city halls, libraries, community centers, or even outdoors.

They can be used to communicate announcements, events, emergency alerts, and other relevant information to residents.

Open-source digital signage platforms include:

Mango Display (https://mangohq.com/)

SignagePlayer (https://signageplayer.com/) – based on the open-source platform Xibo

Confluence Digital Signage (https://confluenceds.com/) – built on top of the Confluence wiki platform, suitable for internal communications within local governments.

These platforms allow customization with RSS feeds, social media integrations, and support for various content types like images, videos, and web pages.

Resident Communication Platforms:

These platforms enable two-way communication between residents and local governments, facilitating engagement, feedback collection, and service requests.

Examples of open-source resident communication platforms include:

Loomio (https://loom.io/) – a deliberative democracy platform that allows residents to discuss, vote, and make decisions on local issues.

Discuss (https://github.com/joinmastgo/discuss) – an open-source alternative to online forums, enabling structured debates and decision-making processes.

Open Town Hall (https://opentownhall.org/) – a resident engagement platform that supports public meetings, surveys, and idea collection (proprietary but with some open-source components).

These platforms can be integrated with digital signage systems to display announcements, polls, or other content gathered from residents.

Electronic ID (e-ID) Initiatives for Resident Authentication:

e-IDs enable secure, digital resident authentication and verification, streamlining online services and transactions with local governments.

Examples of open-source e-ID initiatives include:

IDunion (https://idunion.io/) – an open-source self-sovereign identity (SSI) framework that enables residents to control their personal data and credentials.

uPort (https://www.uport.me/) – a decentralized identity system built on blockchain technology, with support for open-source components like the uPort SDK.

SelfKey (https://selfkey.org/) – an open-source, user-centered SSI framework that allows residents to create, manage, and share their digital identities securely.

These e-ID solutions can enhance resident authentication in communication platforms, allowing for more secure and personalized interactions with local governments.

To maximize the benefits of these systems, local governments should consider integrating them into a cohesive digital ecosystem. This could involve using open-source APIs or middleware to connect digital signage displays, resident communication platforms, e-ID systems, and other relevant services (e.g., smart city apps, online service portals). By doing so, local governments can create seamless, user-friendly experiences for residents while improving operational efficiency and data interoperability.

In Riga, the capital of Latvia, several initiatives have been implemented to improve communication with residents and enhance urban services using digital technologies. Here are some examples:

Riga Digital Signage Network:

Riga City Council has installed digital signage displays in public spaces throughout the city, including city halls, libraries, and other community centers.

The network is used to communicate important information, announcements, events, and emergency alerts to residents and visitors.

Content is managed centrally using a web-based platform that supports various content types like images, videos, and RSS feeds.

While not open-source, the system has been designed with accessibility in mind, ensuring that digital signage displays comply with accessibility standards for people with disabilities.

Riga Smart City:

Riga has launched an ambitious smart city initiative focused on improving urban services, mobility, energy efficiency, and resident engagement through digital technologies.

The project involves several open-source components and platforms, such as:

FIWARE (https://www.fiware.org/) – an open-source platform that provides context-aware infrastructure for developing smart city applications. Riga is one of the cities participating in the FIWARE City project (https://fiware-city.com/cities/riga/).

LoRaWAN networks (https://lora-riga.lv/) – open-source wireless communication protocol used for long-range, low-power data transmission between devices like sensors and gateways. Riga has deployed a city-wide LoRaWAN network to support various smart city applications.

Riga Smart City focuses on co-creation and collaboration with local residents, businesses, and other stakeholders to develop innovative solutions tailored to the city’s unique needs.

e-Citizen Portal:

Riga City Council has developed an e-Citizen portal (https://e-citizen.riga.lv/) that allows residents to access various online services, such as:

Applying for permits and licenses (e.g., building permits, dog licenses)

Paying utility bills and fines

Accessing public transportation information and e-tickets

Viewing personal data related to municipal services (e.g., waste collection schedule, library loans)

While not entirely open-source, the portal is built on a modular architecture that enables integration with other systems and supports third-party application development.

Riga Bike Sharing System:

Riga has implemented an open-source bike sharing system called Donkey Republic (https://donkeyrepublic.com/riga/), which allows residents and visitors to rent bikes using a mobile app or a rental card.

The system uses open-source hardware (e.g., IoT sensors, locks) and software components to manage bike fleet distribution, tracking, and maintenance.

Riga Bike Sharing encourages active mobility, reduces traffic congestion, and promotes a more sustainable urban environment.

These initiatives demonstrate Riga’s commitment to leveraging digital technologies and open-source platforms to improve communication with residents and enhance urban services. By fostering collaboration and innovation, Riga continues to develop new solutions tailored to its unique needs while embracing open standards and interoperability principles.

Apologies for the confusion earlier. Here are some digital initiatives in Estonia that improve communication between residents and local governments, focusing on open-source technologies:

e-Estonia (https://www.e-estonia.com/): Estonia is renowned for its advanced e-government solutions, which enable various online services for residents. While not exclusively focused on local governments, many of these platforms are used at the municipal level as well.

Open-source components and platforms include:

e-Residency (https://e-resident.gov.ee/) – an open-source digital identity program that allows individuals to apply for a government-issued ID card online, enabling secure access to Estonian public services and e-services.

X-Road (https://x-road.e-estonia.com/) – an open-source middleware solution that connects different databases and systems securely, allowing data exchange between various public and private sector institutions.

Estonian ID-card software (https://www.id-kaart.ee/en/id-software/) – free, open-source software for managing digital certificates and accessing online services using an Estonian ID card.

e-Voting: Estonia became the first country in the world to hold a nationwide binding referendum using online voting in 2005. The e-voting system is based on open standards and uses open-source components:

e-Identity and Access Management System (https://www.riigiteenused.ee/en/identity-and-access-management-system)

i-Vote software (http://www.i-voti.ee/en/) – the open-source electronic voting system developed by the Estonian National Electoral Committee.

E-Geomap (https://www.ega.ee/eng): The Environmental Information Service Centre provides an open-source, web-based geospatial platform for visualizing and analyzing environmental data in Estonia. Municipalities can use this platform to:

Display and analyze geographic information related to local environments.

Create maps and visualizations tailored to specific municipal needs (e.g., land-use planning, waste management, urban green spaces).

Collaborate with other stakeholders on environmental projects using open-source tools like QGIS (https://www.qgis.org/).

e-Procurement: Estonia’s e-procurement platform (https://eparenduse.e-Estonia.com/) enables municipalities to manage public procurement processes electronically, including:

Publishing tender notices

Receiving and evaluating bids

Awarding contracts

The platform is built on open-source technologies and follows open standards for interoperability with other systems.

These initiatives showcase Estonia’s commitment to leveraging open-source technologies and digital solutions to improve communication between residents and local governments, enhance transparency, and promote efficient public services.

Brian

Hi Cybuch

Excellent :-))

I look forward to working my way through that lot!