Looking for an open source personal assistant? Mycroft lets you run an open source service that gives you better control of your data.

Install Mycroft on Fedora

Mycroft is currently not available in the official package collection, but it can be easily installed from the project source. The first step is to download the source from Mycroft’s GitHub repository.

$ git clone https://github.com/MycroftAI/mycroft-core.git

Mycroft is a Python application and the project provides a script that takes care of creating a virtual environment before installing Mycroft and its dependencies.

$ cd mycroft-core

$ ./dev_setup.sh

The installation script asks a series of questions to guide you through the installation process. It is recommended to run the stable version and get automatic updates.

When prompted to install the Mimic text-to-speech engine locally, answer No. As described during the installation process, this can take a long time, and Mimic is available as an RPM package in Fedora, so it can be installed using dnf.

$ sudo dnf install mimic
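
The installation steps above can be collected into one small script. This is only a sketch: the run helper and the DRY_RUN variable are illustrative names, not part of Mycroft. By default the script only prints the commands so they can be reviewed first. Note that dev_setup.sh is interactive and will still ask its questions when actually run.

```shell
#!/bin/sh
# Sketch: the installation steps from this article in one place.
# DRY_RUN and run() are illustrative helpers, not part of Mycroft.
DRY_RUN="${DRY_RUN:-1}"

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Each step only runs if the previous one succeeded.
install_mycroft() {
    run git clone https://github.com/MycroftAI/mycroft-core.git &&
    run cd mycroft-core &&
    run ./dev_setup.sh &&
    run sudo dnf install mimic
}

install_mycroft
```

Running the script as-is lists the four commands. Setting DRY_RUN=0 in the environment executes them instead.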

Starting Mycroft

After the installation is complete, the Mycroft services can be started using the following script.

$ ./start-mycroft.sh all

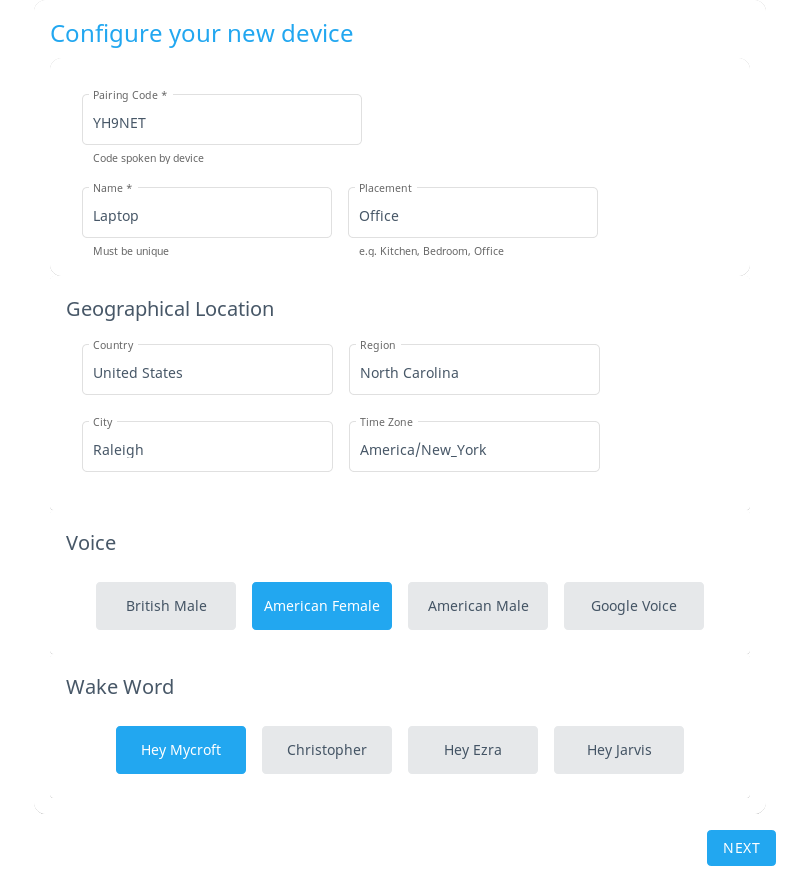

In order to start using Mycroft, the device running the service needs to be registered. This requires an account, which can be created at https://home.mycroft.ai/.

Once the account is created, you can add a new device at https://account.mycroft.ai/devices. Adding a new device requires a pairing code, which your device speaks to you after all the services have started.

The device is now ready to be used.

Using Mycroft

Mycroft provides a set of skills that are enabled by default or can be downloaded from the Marketplace. To start, you can simply ask Mycroft how it is doing, or what the weather is.

Hey Mycroft, how are you?

Hey Mycroft, what's the weather like?

If you are interested in how things work, the start-mycroft.sh script provides a cli option that lets you interact with the services from the command line. It also displays logs, which is really useful for debugging.

Mycroft is always trying to learn new skills, and there are many ways to help by contributing to the Mycroft community.
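
As a rough sketch, the cli option can be wrapped in a small helper. The all and cli arguments are the ones used in this article. The mycroft_start function and the MYCROFT_DIR variable are hypothetical names introduced for this example, and the default path assumes the repository was cloned into your home directory.

```shell
# Sketch: a small wrapper around start-mycroft.sh.
# mycroft_start and MYCROFT_DIR are hypothetical names for this example.
MYCROFT_DIR="${MYCROFT_DIR:-$HOME/mycroft-core}"

mycroft_start() {
    # Default to starting all services when no mode is given.
    mode="${1:-all}"
    case "$mode" in
        all|cli) ;;
        *) echo "unsupported mode: $mode (this sketch only knows all and cli)" >&2
           return 2 ;;
    esac
    if [ ! -x "$MYCROFT_DIR/start-mycroft.sh" ]; then
        echo "start-mycroft.sh not found in $MYCROFT_DIR" >&2
        return 1
    fi
    # Run in a subshell so the caller's working directory is unchanged.
    ( cd "$MYCROFT_DIR" && ./start-mycroft.sh "$mode" )
}
```

With the services already running, mycroft_start cli would open the command line client from any directory.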

Photo by Przemyslaw Marczynski on Unsplash

Daniel

Why do I need an account with a cloud service if this is supposed to be an open source assistant service running on my own device? Or is it? Is it just the client that is open source with all the good stuff locked behind the cloud service?

Clément Verna

The speech-to-text processing is currently done remotely on the Mycroft server, but there is an open discussion about being able to run a personal server (https://community.mycroft.ai/t/the-mycroft-personal-server-starting-the-conversation/4691/8).

Chris

Most of the good stuff runs on the device, including the core voice assistant software and the skills you install. Some skills need to reach out to the internet to work. For example, the weather skill needs to call a weather API to get your local forecast. However, this is not why the account is necessary. The cloud service is currently the only way to interact with skill settings (unless you want to ssh into your device). Cloud accounts are also how Mycroft tracks your agreement to its Terms of Service and Privacy Policy.

Lyes Saadi

Wow, it’s awesome! Tried it, and it works very well! With my « frainch acent » I have some trouble waking him up, but he understands me pretty well 🙂 That will be really good for promoting Linux to those who like personal assistants.

Isaque Galdino

Does it run on a Raspberry Pi?

Clément Verna

Yes, if you check their get started page, there is a dedicated section for Raspberry Pi (https://mycroft.ai/get-started/).

Eric Nicholls

I came looking for copper and found gold. Thank you for this article.

JCjr

Where is the audio processing done?

I ask, because what’s been shown time and time again is these talking tube devices do all the audio processing on their respective Internet services, and are listening in on every word spoken and every sound made despite the marketing describing that they use a “wake-up word”. In reality, the “wake-up word” is just a command to get it to respond to the following sounds, but the microphone is always on – it has to be for the wake-up word to work.

Also, the Mycroft site on which you need to register your device for it to work has a really vague privacy policy.

Clément Verna

The speech-to-text processing is currently done remotely on the Mycroft server, but there is an open discussion about being able to run a personal server (https://community.mycroft.ai/t/the-mycroft-personal-server-starting-the-conversation/4691/8).

The other good thing is that since it is open source, you can go and check that the device is not listening to ambient noise when it is not supposed to 🙂

JCjr

How do you propose to do that?

The system is always listening for a wake up word, and you say audio processing is done at the remote server, meaning they have ALL of the audio data. I have no idea what code they are using on their end, nor do I have any insight on how or if they monetize the audio data (I mean c’mon….it’s pretty much a given that all companies monetize data stored or streamed to their systems in this day and age).

I don’t see much difference between this and Alexa or Google Assistant TBH – UNLESS they do release software to run your own audio processing locally without their required service. Remember: even Android is based on open source software. And it’s probably one of the biggest spycraft kits available.

Dominik

No, Mycroft does the wake word listening locally (either with Mycroft-Precise for pre-learned words or PocketSphinx for custom wake words).

For best-quality speech-to-text of the actual “utterance” (after the wake word), Mycroft is using Google-TTS via an anonymizing proxy. With some additional installation and configuration you can use local STT via Kaldi or PocketSphinx, but their generic models are less accurate.

English text-to-speech synthesis can be done locally with Mimic1 or with better quality by Mimic2 (via Mycroft “cloud”).

There are some more alternatives for TTS and STT.

JCjr

“Mycroft is using Google-TTS via an anonymizing proxy.”

Google. That’s telling.

And what proxy?

Pcwii

As an active member of the Mycroft community I can assure you that the wake word processing is done locally on your device.

And the team is very serious about not collecting personally identifiable information.

JCjr

Then why is this not spelled out in their vague privacy policy that is almost identical to Google’s?

jakfrost

“I have no idea what code they are using on their end”

You do since the code is open sourced.

JCjr

You assume that they’re using that code as-is. Android on a phone isn’t the same as the source code on AOSP.

Jurando

And you assume bad faith on their part, all throughout your questioning and even after all of them have been sufficiently cleared up for everybody else. Why is that?

Paul W. Frields

Although it makes sense to ask questions about processing, I don’t see the point in questioning motive further in this case. Let’s consider that topic closed on this venue, please, and move on.

JS

There is a local STT engine that listens for the wake-up word, since that task does not require such high quality. Transmission to the remote server starts only after that, for a few seconds.

giuseppe

It is better to run everything inside a sandboxed Python env.

Chances are that something will get messed up (dnf…)

Vanga

This stuff has rotted. They changed the license from GPL to Apache. If it succeeds, fully closing it is only a question of time.

Mark Dzmura

Apparently there are two speech recognition systems at work in Mycroft:

A (relatively) simple, local recognizer is responsible for detecting the wakeup word/phrase. (By local I mean that it is running entirely on the local host, not in the cloud.)

The actual command recognition takes place in the cloud. If my understanding is correct, the default recognizer is actually Google’s service, which explains the extremely high quality speech recognition that I have experienced with Mycroft.

(Over time you will note that the number of false positive detections of “Hey Mycroft” is pretty high).

Ultimately many would prefer the use of a high-quality local recognizer component, but it will probably be a long while before an open-source recognizer with the phrase-level accuracy of Google’s is available.

Ruados

Is there an option of just text? I find the idea of a personal assistant interesting, just not the idea of talking with my computer like a madman.

Doug

Yes. Use the command “./start-mycroft.sh cli”

This is the command to start its command line interface. You can then type questions to it and it will reply with voice and a written text answer.

Mehdi

Happy to hear about this. Makes me hopeful about the progress of open source assistants.

Mehdi

I think due to the open nature of the project, it is perfectly possible to run a personal server. Isn’t it?

23r

Can I use a radio or Wi-Fi button to start and stop the voice input?

Is there a Polish language translation?

Yassir

There is a Mycroft AI Plasmoid for KDE Plasma 5 Desktop

https://mycroft.ai/documentation/plasma/

Eugene

OK, I’m not much up-to-date with Linux conspiracy theories, but I think the previous discussion about “openness” of Mycroft boils down to one question, which is important to me.

Is Mycroft always going to be free? Can you guarantee it, at least for some years to come?

Or are we all supplying training data for a planned commercial product? I’m obviously not talking about privacy. Rather, I don’t want to waste my time and computer resources training AI for commercial products, unless I’m paid to do so.