Pipewire is a multimedia server and framework, and the default sound server in recent Fedora versions. It is designed for low-latency audio and video routing and processing. It can multiplex multimedia streams to multiple clients, an important feature of modern operating systems.

General considerations

The Linux sound subsystem evolved in layers. The lowest layer is the hardware layer with the various audio devices. To interact with hardware drivers, Linux has a standardized API called Advanced Linux Sound Architecture (ALSA). Above ALSA sits another layer, a sound server, which handles interactions with userspace applications. Initially, that layer was provided by PulseAudio and JACK, but they were recently replaced by Pipewire. This article documents an outsider’s first steps with Pipewire. The current version is 1.2.7 on Fedora 41 Workstation.

Pipewire is a socket-activated systemd user service. Note that pipewire-session-manager.service is an alias for wireplumber.service.

rg@f41:~$ systemctl --user list-unit-files "pipewire*"

UNIT FILE STATE PRESET

pipewire.service disabled disabled

pipewire.socket enabled enabled

pipewire-session-manager.service alias -

Permissions and Ownership

Each logged-in user has their own instance of Pipewire, and that user owns the process.

rg@f41:~$ ps -eo pid,uid,gid,user,comm,label | grep pipewire

2216 1000 1000 rg pipewire unconfined_t

Most multimedia device files accessed by this process are located under /dev/snd or named /dev/video* and /dev/media*.

rg@f41:~$ ls -laZ /dev/video* /dev/media* /dev/snd/*

crw-rw----+ root video system_u:object_r:v4l_device_t:s0 /dev/video0

crw-rw----+ root video system_u:object_r:v4l_device_t:s0 /dev/media0

crw-rw----+ root audio system_u:object_r:sound_device_t:s0 hwC0D0

crw-rw----+ root audio system_u:object_r:sound_device_t:s0 pcmC0D0c

...

Permissions are 660, restricted to the owners root:video and root:audio. Notice the extra + that indicates ACL permissions. Systemd-logind, whose binary lives at /usr/lib/systemd/systemd-logind, is the login manager on Fedora. As part of the login process it creates a session and assigns a seat, and the file access control lists of the multimedia devices are changed to grant access to the current user. This is how Pipewire has access to device files otherwise owned by root:audio or root:video. To check the current file ACL:

rg@f41:~$ getfacl /dev/video0

# file: dev/video0

# owner: root

# group: video

user::rw-

user:rg:rw- # current user

group::rw-

mask::rw-

other::---
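To make that check scriptable, here is a minimal sketch that scans getfacl output for a named-user entry. The helper name has_user_acl is hypothetical (it is not part of Pipewire or of the acl package), and it assumes the user:NAME:PERMS entry layout shown above.

```shell
# has_user_acl USER
# Reads getfacl output on stdin and succeeds (exit status 0) when it contains
# a named-user entry for USER with read and write access, e.g. "user:rg:rw-".
# Hypothetical helper, for illustration only.
has_user_acl() {
    awk -F: -v u="$1" '
        $1 == "user" && $2 == u && $3 ~ /^rw/ { found = 1 }
        END { exit !found }
    '
}
```

For example, running getfacl /dev/video0 | has_user_acl "$USER" && echo "ACL grants access" should print the message in a local desktop session.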

The command lsof can show all the processes accessing our sound and video devices:

rg@f41:~$ lsof | grep -E '/dev/(snd|video|media)'

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

pipewire 53799 rg 44u CHR 116,9 0t0 913 /dev/snd/controlC0

pipewire 53799 rg 83u CHR 81,0 0t0 884 /dev/video0

wireplumb 53801 rg 24u CHR 116,9 0t0 913 /dev/snd/controlC0

...

You should expect to see only Pipewire and its session manager (wireplumber) accessing your multimedia devices. Legacy apps accessing these subsystems directly will show up here as well.
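On a busy system the lsof listing can get long. The following is a small sketch that condenses it to one line per command and device; device_users is a hypothetical helper name, and it assumes the default lsof layout with the command in the first column and the file name in the last.

```shell
# device_users
# Reads lsof output on stdin and prints unique "COMMAND DEVICE" pairs for
# every open file under /dev/snd/, /dev/video* or /dev/media*.
# Hypothetical helper, for illustration only.
device_users() {
    awk '$NF ~ /^\/dev\/(snd\/|video|media)/ { print $1, $NF }' | sort -u
}
```

A typical call would be lsof 2>/dev/null | device_users. Anything other than pipewire and wireplumber in the result is an application talking to the device directly.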

Configuring

The configuration file /usr/share/pipewire/pipewire.conf is owned by root:root and contains the system defaults. Be sure to check the comments; you will find, among other things, the list of loaded modules. The service can be customized with drop-in configuration files in the user's home directory. The process is lightweight, with only 3 threads by default. As a small test, the following increases the number of data loops; according to the comments in pipewire.conf, a value of -1 requests one data loop per CPU.

rg@f41:~$ mkdir -p ~/.config/pipewire/pipewire.conf.d

rg@f41:~$ cat <<EOF > ~/.config/pipewire/pipewire.conf.d/loops.conf

context.properties = {

context.num-data-loops = -1

}

EOF

rg@f41:~$ systemctl --user daemon-reload

rg@f41:~$ systemctl --user restart pipewire.service

rg@f41:~$ ps -eLo pid,tid,command,comm,pmem,pcpu | grep pipewire

PID TID COMMAND COMMAND PMEM PCPU

40187 40187 /usr/bin/pipewire pipewire 0.3 0.0

40187 40193 /usr/bin/pipewire module-rt 0.3 0.0

40187 40194 /usr/bin/pipewire data-loop.0 0.3 0.0

40187 40195 /usr/bin/pipewire data-loop.1 0.3 0.0

40187 40196 /usr/bin/pipewire data-loop.2 0.3 0.0

...

With pw-config you can check the new state of our updated configuration:

rg@f41:~$ pw-config

{

"config.path": "/usr/share/pipewire/pipewire.conf",

"override.config.path": "~/.config/pipewire/pipewire.conf.d/loops.conf"

}

Some architectural considerations

Pipewire consists of around 30k lines of code in the C programming language. The architecture resembles an event-driven producer-consumer pattern. The control structures are graph-like structures with producer and consumer nodes. One reason for its low latency and low CPU utilization is zero-copy buffer sharing through memfd_create. Signaling among the loosely coupled components is done with eventfd. The code is extensible and modular.

The entire Pipewire state is a graph with the following main elements:

- Nodes (with input/output ports)

- Links (edges)

- Clients (user processes)

- Modules (shared objects)

- Factories (module libraries)

- Metadata (settings)

The graph has nodes and edges/links. Nodes with only input ports are called sinks, and those with only output ports are called sources. Nodes with both are usually called filters.
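The naming rule can be written down as a tiny sketch. The function node_kind is hypothetical, and it deliberately simplifies: it ignores the monitor ports that real sink nodes may expose in addition to their inputs.

```shell
# node_kind INPUT_PORTS OUTPUT_PORTS
# Prints the conventional name of a node given its number of input and
# output ports. Hypothetical helper; monitor ports are not considered.
node_kind() {
    if [ "$1" -gt 0 ] && [ "$2" -gt 0 ]; then
        echo filter
    elif [ "$1" -gt 0 ]; then
        echo sink
    elif [ "$2" -gt 0 ]; then
        echo source
    else
        echo unknown
    fi
}
```

With this rule, a node with two inputs and no outputs (node_kind 2 0) is reported as a sink, and one with a single output (node_kind 0 1) as a source.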

Modules

Each module provides a specific server functionality. Spend some time browsing the module names. There is a module that manages access among graph components, a module managing thread priorities, a module that emulates the former PulseAudio server, a module for XDG Portal, a module for profiling, and even a module implementing streaming over the network via RTP.

rg@f41:~$ ls -la /usr/lib64/pipewire-0.3/

-rwxr-xr-x. 1 root root 28296 Nov 26 01:00 libpipewire-module-access.so

-rwxr-xr-x. 1 root root 98808 Nov 26 01:00 libpipewire-module-avb.so

...

Plugins

Plugins handle communication with devices, including the creation of ring buffers, used heavily in multimedia processes. There is a plugin that handles communication with ALSA, a plugin that manages communication with Bluetooth, libcamera, etc.

rg@f41:~$ ls -la /usr/lib64/spa-0.2/

drwxr-xr-x. 1 root root 28 Dec 11 12:13 alsa

drwxr-xr-x. 1 root root 420 Dec 11 12:13 bluez5

...

Plugins extend a generic API called SPA, or Simple Plugin API. These self-contained shared libraries provide factories containing interfaces. Because they are self-contained, some of these plugins are also used by the session manager. The spa-inspect utility allows querying the shared objects for factories and interfaces.

Useful Commands

We can see (R)unning nodes with pw-top. In the following example, there are 3 nodes currently running. Any streaming performed via Pipewire will appear in this output table.

rg@f41:~$ pw-top

S ID QUANT RATE WAIT BUSY FORMAT NAME

R 90 512 48000 139.8us 23.0us S16LE 2 48000 bluez_output..

R 99 900 48000 66.7us 37.7us F32LE 2 48000 + Firefox

R 112 4320 48000 105.5us 11.4us S16LE 2 48000 + Videos

Quantum (QUANT) can be thought of as the number of audio samples (buffer size) to be processed each graph cycle. This varies depending on the device type and can be configured or negotiated via configuration files. For example, the Videos application has a buffer size of 4320 samples, while the Bluetooth output node has only 512.

Rate (RATE) is the graph processing frequency. In the above output, the graph operates at 48 kHz, meaning each second the graph can process 48,000 samples.

The ratio between Quantum and Rate is the latency in seconds. In the case of Bluetooth headsets, the latency is 11 ms, while for the Videos app it is 90 ms. The pw-top man page provides links to better explanations. Understanding these indicators can help boost performance.
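The arithmetic behind those two figures can be reproduced with a short sketch. The function name latency_ms is hypothetical; the quantum and rate values are the ones from the pw-top table above.

```shell
# latency_ms QUANTUM RATE
# Prints the duration of one graph cycle in milliseconds:
# QUANTUM / RATE * 1000. LC_ALL=C keeps the decimal point locale-independent.
latency_ms() {
    LC_ALL=C awk -v q="$1" -v r="$2" 'BEGIN { printf "%.1f\n", q * 1000 / r }'
}

latency_ms 512 48000    # Bluetooth output node, prints 10.7
latency_ms 4320 48000   # Videos application, prints 90.0
```

The Bluetooth value of 10.7 ms is what the text rounds to 11 ms.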

Another powerful utility is pw-cli. It opens a shell and allows you to operate interactively on the Pipewire graph at runtime. For permanent changes, you need to amend the configuration files.

rg@f41:~$ pw-cli h

Available commands:

help | h Show this help

load-module | lm Load a module.

unload-module | um Unload a module.

connect | con Connect to a remote.

disconnect | dis Disconnect from a remote.

list-remotes | lr List connected remotes.

switch-remote | sr Switch between current remotes.

list-objects | ls List objects or current remote.

info | i Get info about an object.

create-device | cd Create a device from a factory.

create-node | cn Create a node from a factory.

destroy | d Destroy a global object.

create-link | cl Create a link between nodes.

export-node | en Export a local node

enum-params | e Enumerate params of an object

set-param | s Set param of an object

permissions | sp Set permissions for a client

get-permissions | gp Get permissions of a client

send-command | c Send a command <object-id>

quit | q Quit

For example, we can print the above streaming nodes with pw-cli info <id> and inspect all the properties.

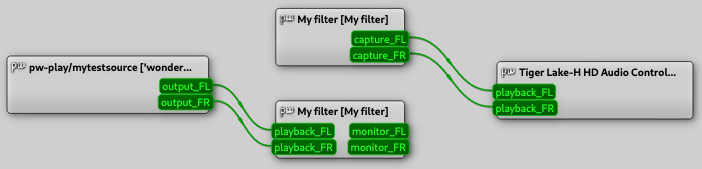

Graph visualization

All the derived utilities process the same large JSON graph that represents the Pipewire state. With pw-dump we get this state, but the output is hard to read. Another utility, pw-dot, gives us a user-friendly graph. Take note of the following 3 steps, as you will need to repeat them during this tutorial. The dot utility used in the following example is available after installing Graphviz.

rg@f41:~$ pw-dot --detail --all

rg@f41:~$ dot -Tpng pw.dot -o pw.png

rg@f41:~$ loupe pw.png

It is a large graph. Take a minute to look at the various nodes, the node types distinguished by different colors, and some meaningful properties like the ports, the media class, and the links. You can find a description of these properties in man 7 pipewire-props. Observe the media.name property that indicates the current song playing in Firefox.

Most of the streams you will see here will be Source/Audio streams.

Fedora 41 Workstation’s new default camera app is Snapshot. Start the app and you will notice it is visible to Pipewire. Let’s keep Snapshot running and open another camera app. Don’t forget to install ffmpeg-free for the following steps.

rg@f41:~$ ffplay -f v4l2 -i /dev/video0

/dev/video0: Device or resource busy

ffplay is trying to access /dev/video0 directly. According to the kernel docs, shared data streams should be implemented by a userspace proxy and not by the v4l2 drivers. Pipewire is already using the libcamera API, as you have seen in the plugins list, and offers pw-v4l2 as a compatibility wrapper. Let’s run the same command again, this time through the wrapper.

rg@f41:~$ pw-v4l2 ffplay -f v4l2 -i /dev/video0

This time it worked, and we have 2 apps that share the same camera stream. This is the added value brought by a high-performing multimedia server. We also see both Source/Video streams in the graph. The same is valid for the browser. In your browser configuration you should be able to choose whether to use Pipewire or v4l2 devices.

You can also use Helvum or Qpwgraph. Go ahead and test any of these apps. You will find a simplified graph with only certain node types and drag-and-drop features. You will appreciate the ease of use, but sometimes you will need the full graph and the full set of node properties. In such cases, you will revert to pw-dump, pw-cli, and pw-dot.

Managing nodes

One way to manage temporary graph nodes is to use pw-cli. For example, here we are creating a temporary test node, valid for as long as the session is open. For factory names, check the source code and some useful examples in the conf page comments.

rg@f41:~$ PIPEWIRE_DEBUG=2 pw-cli

pipewire-0>> create-node spa-node-factory factory.name=support.node.driver node.name=mynewnode

Another way to create nodes, but this time in our permanent configuration, is as described in the configuration comments:

rg@f41:~$ cat <<EOF > ~/.config/pipewire/pipewire.conf.d/mynewnode.conf

context.objects = [{

factory = adapter

args = {

factory.name = api.alsa.pcm.source

node.name = "alsa-testnode"

node.description = "PCM TEST"

media.class = "Audio/Source"

api.alsa.path = "hw:0"

api.alsa.period-size = 1024

audio.format = "S16LE"

audio.channels = 2

audio.position = "FL,FR" }

}]

EOF

rg@f41:~$ systemctl --user daemon-reload

rg@f41:~$ systemctl --user restart pipewire.service

A faster and easier way to create nodes is to use the helper utilities pw-record and pw-play to create sinks and sources. A small example follows in the Example section.

Security considerations

The binary is owned by root and can be executed by other users.

rg@f41:~$ ls -la /usr/bin/pipewire

-rwxr-xr-x. 1 root root 20104 Nov 26 01:00 /usr/bin/pipewire

The protocol used for socket communication is called the native protocol. The sockets have broader permissions (readable and writable by everyone) than the other systemd temporary files next to them. It’s good to remember that /run is a tmpfs in-memory filesystem.

rg@f41:~$ ls -laZ /run/user/1000/pipewire*

srw-rw-rw-. rg rg object_r:user_tmp_t:s0 pipewire-0

-rw-r-----. rg rg object_r:user_tmp_t:s0 pipewire-0.lock

srw-rw-rw-. rg rg object_r:user_tmp_t:s0 pipewire-0-manager

-rw-r-----. rg rg object_r:user_tmp_t:s0 pipewire-0-manager.lock

The systemd sockets are used to set up the stream structures; afterwards, the audio nodes communicate directly via memfd. According to the man page, memfd_create creates an anonymous, in-memory file, which is vital for performance. The Pipewire source code uses these calls with flags such as MFD_HUGETLB, MFD_ALLOW_SEALING, and MFD_CLOEXEC to seal the memory and to avoid leaking file descriptors to other processes.

Pipewire comes with a module called protocol-pulse. The module has a separate systemd socket-activated user service. This emulates a PulseAudio server for compatibility with a large range of clients still using the PulseAudio libraries.

rg@f41:~$ ls -laZ /run/user/1000/pulse/native

srw-rw-rw-. rg rg user_tmp_t:s0 /run/user/1000/pulse/native

Systemd offers ways to analyze and sandbox services. The following command lists some recommendations, but applying them does not guarantee system-level protection.

rg@f41:~$ systemd-analyze --user --no-pager security pipewire.service

Any userspace application can view the graph, access the socket, and create nodes. The node properties carry, in some cases, information that might be private. The media.name property is an example. This does not happen for private Firefox tabs. This property might be useful for certain GUI features, like Gnome tray notifications.

You may have noticed a node called speech-dispatcher randomly connected to your speaker. This accessibility feature may be requested by various programs, including your browser. Try to understand this behavior in your favorite multimedia apps.

Example

As a small example, let’s try to create an audiobook – voice recognition stream with Pipewire source and sink nodes. Let’s create the source node using pw-play. In other scenarios, pw-play can read from stdin (-).

rg@f41:~$ curl https://ia600707.us.archive.org/8/items/alice_in_wonderland_librivox/wonderland_ch_10_64kb.mp3 --output test.mp3

rg@f41:~$ ffmpeg -i test.mp3 -ar 48000 -ac 1 -sample_fmt s16 test.wav

rg@f41:~$ pw-play --target=0 --format=s16 \

--quality=14 --media-type=Audio --channels=1 \

--properties='{node.name=mytestsource}' test.wav

Auto-connection of the process to the speakers did not occur because of the target argument. Let’s create the sink node. For simplicity we will use the pocketsphinx package to test voice recognition.

rg@f41:~$ sudo dnf install pocketsphinx

rg@f41:~$ pw-record --media-type=Audio --target=0 --rate=16000 \

--channels=1 --quality=14 --properties='{node.name=mytestsink}' - \

| pocketsphinx live -

As you can see, we created a sink node. As requested by the target argument, it was not auto-connected to any source node. The other arguments, like the number of channels, follow recommendations from the Pocketsphinx man pages. We will create the links manually. Use pw-dot to find the node and port ids of the source and the sink. Alternatively, you can use Helvum or Qpwgraph to create the links by drag-and-drop.

rg@f41:~$ PIPEWIRE_DEBUG=2 pw-cli

pipewire-0>> create-link <nodeid> <portid> <nodeid> <portid>

We created a stream between a source and a sink and can already see some output in the Pocketsphinx terminal. The processes involved are visible to Pipewire and can take advantage of all its capabilities. In a similar way, you can interface with your favorite Flatpak. Many of these apps exist in a sandbox and interact with the underlying system using the XDG Portal APIs.

Another example

Let’s try to make another example, involving filters. We have previously seen that Pipewire has a module called filter-chain. First of all, we will need to install the desired filter plugins. There is a large variety of LADSPA and LV2 filter plugins in DNF repos. Pipewire also has a few built-in filters made available by the audiomixer plugin.

rg@f41:~$ sudo dnf install ladspa ladspa-rev-plugins

rg@f41:~$ rpm -ql ladspa-rev-plugins

/usr/lib64/ladspa/g2reverb.so

As we can see, the package installed a shared object. For LADSPA plugins we can extract metadata using the analyseplugin utility. Important information is visible in the following output:

rg@f41:~$ analyseplugin /usr/lib64/ladspa/g2reverb.so

Plugin Name: "Stereo reverb"

Plugin Label: "G2reverb"

Ports: ...

"Room size" input, control, 10 to 150

"Reverb time" input, control, 1 to 20

"Input BW" input, control, 0 to 1

"Damping" input, control, 0 to 1

"Dry sound" input, control, -80 to 0

"Reflections" input, control, -80 to 0

"Reverb tail" input, control, -80 to 0

Based on this we can create the filter file. Make sure the plugin name, location, label, and type are correct.
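The control values deserve the same care. As a minimal sketch, the following checks a value against the range that analyseplugin reports for a control port. The helper name control_in_range is hypothetical, and it assumes port lines of the form shown above ("Name" input, control, LOW to HIGH).

```shell
# control_in_range NAME VALUE
# Reads analyseplugin output on stdin and succeeds (exit status 0) when the
# control port called NAME exists and VALUE lies within its reported range.
# Hypothetical helper, for illustration only.
control_in_range() {
    awk -v name="$1" -v val="$2" '
        index($0, "\"" name "\"") && match($0, /control, -?[0-9.]+ to -?[0-9.]+/) {
            # The matched text splits into: control, LOW, to, HIGH
            split(substr($0, RSTART, RLENGTH), f, /[ ,]+/)
            seen = 1
            ok = (val + 0 >= f[2] + 0 && val + 0 <= f[4] + 0)
        }
        END { exit !(seen && ok) }
    '
}
```

For the value used in this tutorial, analyseplugin /usr/lib64/ladspa/g2reverb.so | control_in_range "Reverb time" 2 should succeed, since 2 lies between 1 and 20.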

rg@f41:~$ cat <<EOF > ~/.config/pipewire/pipewire.conf.d/myfilter.conf

context.modules = [

{

name = libpipewire-module-filter-chain

args = {

node.description = "My filter"

media.name = "My filter"

filter.graph = {

nodes = [

{

type = ladspa

name = "Stereo reverb"

plugin = "/usr/lib64/ladspa/g2reverb.so"

label = "G2reverb"

control = {

"Reverb time" = 2

}

}

]

}

audio.channels = 2

audio.position = [ FL FR ]

capture.props = {

node.name = "myfilter"

media.class = Audio/Sink

node.target = 0

}

playback.props = {

node.name = "myfilter"

media.class = Audio/Source

node.target = 0

}

}

}

]

EOF

rg@f41:~$ systemctl --user restart pipewire.service

Check the graph, for example with Qpwgraph, to show the filter sink/source pair. Note that the sink node also has monitor ports. These ports allow inspection of the stream before processing. Now create the source node as before:

rg@f41:~$ pw-play --target=0 --format=s16 --media-type=Audio \

--properties='{node.name=mytestsource}' test.mp3

Connect the nodes manually by drag-and-drop to your Audio Controller Speaker. Your setup should appear as below:

Confirm the narrator’s voice has a reverb effect. Now feel free to experiment with your favorite plugins or more nodes in your filter. The filter-chain folder contains other useful examples. Remember to delete the test configurations from the .config folder when you complete your exploration.

Conclusions

Pipewire is an interesting piece of low-level, high-performing software. We have discussed the main commands, configuration options, and filesystem interactions. Reading the Pipewire docs is highly recommended. “Linux Sound Programming” by Jan Newmarch will also provide insight into historical aspects. Hopefully you now have enough context to better understand the terms you encounter. Thanks to the people maintaining the mentioned packages.

Julia

About multimedia: whenever I tried Fedora Linux, video playback on sites like Reddit, Twitch and X didn’t work!

“Your browser encountered an error while decoding the video. [Error #3000]” or similar. Then again, on Linux Mint all site videos and other videos play right after install. I would like to use Fedora, as the apps in it are newer, but basic things don’t work and I’m not tech-savvy at all :/

Hammerhead Corvette

This sounds like a browser and codec issue. You should post in the forums to get help on how to update and fix those issues. It’s a fairly simple fix that most users new to Fedora need, since Fedora does not ship proprietary codecs. --> https://discussion.fedoraproject.org/

Aditya Sharada

IIRC you need some skills in console and coding to get videos working in modern ways on Fedora. YouTube should work fine though.

If you look at this article too, you can see it is pretty much all about coding in the console. Fedora is an advanced distribution for coders and such. Try Linux Mint with flatpaks or snaps to get fresher apps on a stable base.

Ed

Aditya I wanted to move from Mint to Fedora, specifically Ubuntu Studio 24 hoping to be able to better run things like OBS & Davinci Resolve and have good audio.

I’m not a coder and never will be but very happily got permanently away from MS Windows.

Will Ubuntu Studio 24 be a good choice for me?

It even has many things like OBS installed but so far not Davinci Resolve but I do have that running on Mint and only had to add 3 dependencies.

Chevek

Hi, this is a codec issue, because Fedora won’t distribute patented codecs, among other things.

I’ve made a script which will fix this and much more :

https://codeberg.org/Chevek/Fedora-CCG/src/branch/main/README-English.md

Best regards

huscape

You recommend installing XWayland Video Bridge. When using the Gnome Desktop I can only strongly advise against it, see:

https://discussion.fedoraproject.org/t/after-upgrading-f39-to-f40-a-white-rectangle-in-the-top-left-corner-of-the-desktop-appers-xwaylandvideobridge/114764

https://bugzilla.redhat.com/show_bug.cgi?id=2277867

mediaklan

I would have also loved to read about how to get audio over a LAN for VMs (not sure how to define that in English, sorry) but this article is already plenty clear and useful as it is. Thank you!

Steven

Pipewire is problematic (iirc) for home theater use when connecting a PC to an AVR, for some reason it constantly resyncs or resets the connection and will cause popping from audio and black screens for the video side of the HDMI connection from the PC to the AVR.

Every time I rebuild an HTPC for this use, it bites me and has to be reconfigured (I’m dealing with this now, and the article reminded me that sometime in the past I would fall back to older solutions because they were more stable and consistent).

Great article though, really appreciate the depth and delivery! 🙂

RG

Hi Steven, unfortunately I do not own an AVR and I am still discovering pipewire, it seems like a large rabbit hole. Try to install the package ladspa-swh-plugins . This package contains the filters mentioned in the “filter-chain” link I put above. This includes filters to create dolby surround sink/source nodes etc….. Try to redo the filter tutorial with sink-dolby-surround.conf . This will allow you to switch your sound output in the Gnome right upper corner to dolby surround. I would also inspect with qpwgraph to make sure the node ports are properly paired. But I am just guessing here. Even so, the dolby surround filter was a better improvement for me even for my stereo headset….

crd

Excellent article! Thank you for taking the time to write it. This is precisely the kind of treatment that a complex subsystem like Pipewire deserves.

Hammerhead Corvette

I liked the use of the examples here ! “As a small example, let’s try to create an audiobook” Using ffmpeg, pw-play, pocketsphinx something I knew nothing about.

RG

A much more experienced linux user told me about pocketsphinx a few years back in Germany. It is a pretty old project/tech but lightweight and it was considered a standard in its field. Glad I had the chance to use it, though there are more performant flatpak apps nowadays. Thank you for the encouragement.

Darvond

That’s great, but it’s also a lot of inside baseball. I know that all code isn’t made to be user serviceable, but tuning a multimedia/audio server shouldn’t look this complicated.

Speaking of, where’s the pwvucontrol, Sonusmix, coppwr or other graphical utilities in Fedora to make this less about plugging away at systemd modules?

RG

All great apps, I am sure. Maybe in another article. For now we talked more in the spirit of “everything is a file”. The 2 mentioned apps are also recommended by WT in last interviews on FM. I suppose pipewire api also changed and all these GUIs need to keep up eventually…

Melvin D

Great article! I’ve been trying to figure out for a few days now how to set a default quantum buffer size for pipewire because my pc kept having sound errors while playing games on steam. I would love a gui option to configure pipewire in the future, but this helped me understand how it works.

RG

I am still going around in the dark on this subject. For example regardless how you start firefox, youtube will have a buffer of 3600, while the same firefox on soundcloud will have a buffer of 900, so it seems like the client node decides what buffer to use depending on the context, operating, of course, within the quantum/rate limits from pipewire.conf

I think it is important to understand the driver-follower context described in the pw-top man page. To make a few deterministic tests, just use “PIPEWIRE_LATENCY=128/48000 pw-play test.mp3”. This will set the correct buffer property. However, this environment variable applies to Pipewire clients (pw-play); PulseAudio-compatible clients (paplay, flatpaks) use a different environment variable. Using dedicated equipment might require a different approach. I hope you will manage to find the proper set of settings for your use case… most probably it will be a combination of graph-wide changes in pipewire.conf and client node latency requests via environment variables or the node.latency property.

Melvin D

The steps I took to resolve Steam games using a buffer size that was too low: I copied the pipewire folder from /usr/share to /etc and made my own changes. It appears the settings I needed to change were default.clock.quantum and default.clock.min-quantum, under context.properties in pipewire.conf. I created a conf file in the pipewire.conf.d folder, named it pipewire_modifications.conf (I left these settings commented out in the pipewire.conf file), and put these settings in there:

#

context.properties = {

default.clock.min-quantum = 1024

}

Next time I ran the game the alsa buffer (which I monitored with pw-top) never went below 1024. I also tested with max-quantum settings and it did the same for me.

huscape

For those of you who want the most perfect music reproduction possible with the lowest possible latencies, switch off the pipewire method here and use audio directly via ALSA without any detours:

→ disable pulseaudio and pipewire:

mkdirhier ~/.config/systemd/user/

systemctl --user mask pulseaudio.socket

systemctl --user mask pipewire.socket

systemctl --user mask pipewire-pulse.socket

systemctl --user mask pulseaudio.service

systemctl --user mask pipewire.service

systemctl --user mask pipewire-pulse.service

← disable pulseaudio and pipewire:

Enable ALSA-output for Firefox via apulse proxy:

→ Enable ALSA-output for Firefox via apulse proxy:

git clone https://github.com/i-rinat/apulse

cd apulse

mkdir build && cd build

cmake -DCMAKE_INSTALL_PREFIX=/usr -DCMAKE_BUILD_TYPE=Release ..

make

sudo make install

← Enable ALSA-output for Firefox via apulse proxy:

→ ~audio/bin/apulse-firefox:

#!/bin/sh

APULSEPATH="/usr/lib/apulse"

export LD_LIBRARY_PATH=$APULSEPATH${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH}

nice -n -19 /usr/bin/firefox "$@"

← ~audio/bin/apulse-firefox:

Paul Davis

Pipewire has no particular impact on latency compared with using ALSA directly. It can precisely match what ALSA can do if you want, or use more latency, if you want.

You will gain almost nothing by dropping Pipewire in the way that you’ve suggested here.

In addition, Pipewire supports the protocol developed between PulseAudio and pro-audio applications, so that when the latter start up, Pipewire can get out of the way and they can have “bare metal, exclusive” access to the device. This works without disabling Pipewire itself.

huscape

Dear Paul,

It would be very nice if you would describe here how to get pipewire to behave transparently and to pass the data directly to the ALSA stack of Linux without pipewire itself intervening. Kind regards, hu

huscape

Dear Paul,

it’s a shame you didn’t find time to answer my question. Here are my reasons for not using pipewire.

Based on a Linux system that is intended to be used exclusively for playback of high-resolution audio data.

Configuring a Linux system dedicated to high-resolution audio playback to use ALSA directly, bypassing pipewire, offers several advantages:

Minimal processing overhead – By eliminating intermediate audio servers, the system avoids unnecessary resampling, mixing, or latency, ensuring that audio data reaches the hardware with minimal alteration.

Bit-Perfect playback – When properly configured, ALSA can deliver an unaltered audio stream to the DAC, preserving the original resolution and sample rate of high-definition audio files.

Exclusive hardware access – Direct communication with ALSA allows the player to take full control of the audio interface, preventing system-wide mixing and ensuring that no background processes interfere with playback.

Lower latency – Since there are no additional layers handling audio processing, playback latency is reduced, which can be beneficial for real-time audio applications (Jitter is minimized and will be minimized more with a cascade of Mutec MC-3’s (3 MC-3’s in cascade) between the Audio-PC and the DAC).

Fine-tuned configuration – ALSA provides extensive configuration options, enabling precise control over buffer sizes, sample rates, and output formats to match the capabilities of high-end audio hardware.

This approach ensures that the system remains focused solely on high-quality audio reproduction, making it an excellent choice for audiophiles and professional use cases.

With best regards, hu

Paul Davis

I answered your question, I guess it is in a moderation queue.

Nothing that you’ve said is wrong, but I also find it a bit tangential.

Sure, if you want to build a Linux system whose only audio functionality is single-application playback, then either (a) do not install Pipewire OR (b) use pavucontrol or Pipewire’s own config files to disable the use of the audio interface you want to “reserve” for direct audio playback (I used to do this back before I started using Pipewire).

At that point, your audio interface will be available for use by anything using ALSA directly (or indirectly), with no involvement from pipewire.

However, you’re incorrect about the latency benefits being somehow tied to using ALSA directly. Whether a given audio API adds latency depends entirely on the design of the audio API. JACK, for example, adds no latency at all. More importantly, if you’re really just listening to music, playback latency is completely irrelevant. Jitter is also completely unrelated to latency.

Pipewire can also be used for bit-perfect playback, just FYI. But it sounds as if in your actual or imagined use case, Pipewire plays no useful role, so as I said, either do not install it or disable its use of a particular audio interface.

huscape

Dear Paul,

first of all, thank you for your willingness to discuss. I suspect that our assessments are not that far apart. As far as your statement “More importantly, if you’re really just listening to music, playback latency is completely irrelevant. Jitter is also completely unrelated to latency.” is concerned, however, I disagree.

In a professional audio playback system, latency and jitter are closely related but distinct factors that affect audio quality and synchronization.

Latency

Latency refers to the time delay between an audio signal being input into the system and its output. In digital audio systems, latency is primarily influenced by factors such as:

- Processing power: Higher computational demands (e.g., DSP effects, sample rate conversion) add to latency.

- Audio interface and drivers: The efficiency of drivers (e.g., ASIO, Core Audio) impacts the overall delay.

Jitter

Jitter is a form of time-based distortion caused by irregular timing variations in digital audio signal transmission. It occurs when clock signals in digital-to-analog conversion (DAC) or digital transmission (e.g., SPDIF, AES/EBU) are unstable. Jitter can lead to:

- Reduced audio fidelity: Loss of clarity, imaging, and transient accuracy.

Latency and jitter ARE connected

While latency is a constant delay, jitter refers to variations in timing. They are interconnected in several ways:

- Clock stability: In professional audio systems, precise clocking reduces jitter, improving playback accuracy without necessarily impacting latency. (That's why I connected the cascaded Mutec MC-3s and the DAC to a common 10 MHz reference clock generator, the Mutec REF10.)

For optimal performance in professional audio playback, a balance between low latency and minimal jitter is required. High-end audio systems use low-latency drivers, stable clocking mechanisms, and optimized buffering to achieve high-quality audio with minimal timing errors.

Kind regards, hu

PS: it’s an actual use case.

Paul Davis

I’ve been a full time audio software developer for at least 20 years. I do not need a lecture/lesson on what latency or jitter are. They are not connected. Jitter is caused by the sample/word clock. Latency is caused by buffering.

Thanks, and good night.
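The statement that latency is caused by buffering can be made concrete with a little arithmetic: one buffer of N frames at a sample rate of R Hz takes N / R seconds to play. A minimal sketch, where the function name `buffer_latency_ms` and the example buffer sizes are illustrative assumptions rather than values from this discussion:

```shell
# Latency contributed by one buffer, in milliseconds:
#   latency_ms = frames * 1000 / sample_rate
buffer_latency_ms() {
    frames=$1
    rate=$2
    awk -v f="$frames" -v r="$rate" 'BEGIN { printf "%.2f\n", f * 1000 / r }'
}

buffer_latency_ms 1024 48000   # prints 21.33
buffer_latency_ms 256 48000    # prints 5.33
```

Nothing in this calculation involves the stability of the sample clock, which is where jitter comes from.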

Xyz

Two questions, for anyone knowledgeable:

Since Pipewire was introduced to Fedora, I’m unable to permanently set the level for output audio. I understood that alsamixer should be used, and I do that, but the level just goes back to the same default whenever I restart the machine. I tried “alsactl store”, but to no avail. Any suggestions here?

Since a recent update to Firefox, it just reports that there is no camera. The camera works perfectly fine with Chrome, with “ffplay /dev/video0”, or in any other program; only Firefox is the problem. Is there any way I can check what’s going on through pipewire?

Overall – this is, I think, already the sixth or so article about pipewire that I have read, and I don’t get it. These articles always have to go in depth with the architecture etc. – I kind of understand its purpose, and that it’s very powerful, but for an ordinary Joe like myself who just wants to do some video-conferencing from time to time, I still don’t understand why there aren’t some simple tools like a mixer etc. to control it in the most basic manner, without having to go through all these details in order to troubleshoot eventual issues.

Xyz

Ok – writing this comment prompted me to dig into this once again, and I was able to solve the first problem. Apparently, at least on Fedora, restoring the state of sound controls can be handled by either the alsa-state or the alsa-restore service. However, the “alsactl store”-d state seems to be restored only by the second service; alas, the first one is the default on Fedora, so whatever was stored by “alsactl store” got ignored. The “best” part: to select which service is run upon startup, the existence of the /etc/alsa/state-daemon.conf file is checked: if it exists then the alsa-state service will be started, and if it does not then the alsa-restore service will be started.
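The selection rule described above can be checked with a short sketch. The function name `alsa_state_mode` is made up for illustration, and the optional path argument exists only so the check can be tried against another file; the default path is the one named above.

```shell
# Report which ALSA state service the marker file selects,
# following the rule described above.
alsa_state_mode() {
    conf=${1:-/etc/alsa/state-daemon.conf}
    if [ -e "$conf" ]; then
        echo "alsa-state"
    else
        echo "alsa-restore"
    fi
}

alsa_state_mode
```

Running `systemctl status alsa-state alsa-restore` afterwards should confirm which of the two actually ran on the current boot.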

Now, apparently this has not much to do with ALSA and certainly not with pipewire – it is all due to systemd madness, and also probably by Fedora misconfiguration. But it’s still plain stupid that an end user has to learn about all this crap just to have sound levels saved across reboots.

In any case, if anyone has a suggestion about the camera and Firefox, I’d much appreciate it.

RG

Hi Xyz, I did some tests on Fedora 41 and 42-beta and I could not reproduce this issue. I can say that whenever you change the volume in gnome-control-center, this specific service will interact, probably due to historical reasons, with the pipewire-pulse socket. If you have experimented with some other original pulse servers that have overwritten that socket, this could be one cause for such problems. Normally, restoring everything related to the session is the job of the session manager – wireplumber, and I am still reading and experimenting with it. For now I can only hope you will manage to solve your sound problems. As for Firefox… try entering “about:config” in the address bar, then in the new page, find the pipewire keys and toggle the value. Sorry I could not be of more help.

Xyz

Thanks for your reply, and for your excellent article. It was not my intention to detract from the article’s quality, nor was I expecting you or anyone else to solve the issue that I have. I’m sorry that I vented my frustration here. I’ve spent some additional time on the sound level issues. It seems I was wrong and that restoring the sound levels works properly either way, but that applications, and Firefox in particular, are changing levels at will. Actually, it seems that sites like YouTube are doing it; there are various discussions on these topics over the net. One may indeed have to dig deeper into Pipewire to actually find how to disable such behaviour.

Josh

I’m sure this info is available with a Google search, but I’m asking it here for the benefit of others too:

So if I’m a linux musician, do I still need Jack/Qjackctl/etc. for running audio applications like Ardour?

Paul Davis

Pipewire implements the JACK API and can act as a JACK server.

I am the original author of JACK and one of the two main devs of Ardour. I’ve been using just Pipewire now for about 6-7 months. It’s fine.

But also, you do not need JACK to use Ardour, and for most users, Ardour’s ALSA backend is a better choice. JACK (whether “actual” JACK, or the Pipewire implementation) is only useful if you need/want to (a) route audio/MIDI between applications (b) share the audio interface among multiple applications.

rugk

Yeah good, but it is missing a permission system, especially for audio input aka recording(!) sound from your microphone.

This is a very sensitive privacy matter, and also poses a technical challenge for flatpaks now, as they cannot differentiate between audio output and input, so all apps that may play audio can also record your microphone.

This is obviously not at all desirable.

See https://github.com/flatpak/xdg-desktop-portal/discussions/1142 and https://github.com/flatpak/flatpak/issues/6082