Introduction

Using Fedora and Linux to produce and play music is now easy. Not that long ago, it was a nightmare: configuration was a complicated task and you needed to compile some applications yourself. Compatibility with electronic devices was a story of its own. But now we can see the end of the road: playing music under Linux with Fedora is becoming user friendly.

Configuration

Fedora has long been usable for playing music thanks to the CCRMA repository. There is also a dedicated Fedora Spin: Fedora Jam. And today, there is also a COPR repository (which I manage) with a lot of additional audio packages in it.

To install the Fedora CCRMA repository:

rpm -Uvh http://ccrma.stanford.edu/planetccrma/mirror/fedora/linux/planetccrma/$(rpm -E %fedora)/x86_64/planetccrma-repo-1.1-3.fc$(rpm -E %fedora).ccrma.noarch.rpm

dnf install https://download1.rpmfusion.org/free/fedora/rpmfusion-free-release-$(rpm -E %fedora).noarch.rpm

dnf install https://download1.rpmfusion.org/nonfree/fedora/rpmfusion-nonfree-release-$(rpm -E %fedora).noarch.rpm
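A short aside on how these commands pick the right release: `$(rpm -E %fedora)` expands to the release number of the running Fedora system. The sketch below mimics that substitution with a hypothetical helper, `rpmfusion_free_url`, which takes the release number as an argument so it can be tried on any machine. The value 32 is only an example.

```shell
# Hypothetical helper (not part of rpm or dnf): builds the RPM Fusion
# "free" release URL for a given Fedora release number, the same way
# $(rpm -E %fedora) is substituted inline in the commands above.
rpmfusion_free_url() {
    release="$1"
    echo "https://download1.rpmfusion.org/free/fedora/rpmfusion-free-release-${release}.noarch.rpm"
}

# On a Fedora system you would pass "$(rpm -E %fedora)" instead of 32.
rpmfusion_free_url 32
```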

To install the LinuxMAO Fedora COPR repository:

dnf copr enable ycollet/linuxmao

A few minimal steps remain before you can use a music application efficiently. First, you will need to install the Jack Audio Connection Kit and the QJackCtl user interface:

dnf install jack-audio-connection-kit qjackctl

Then, as a root user, you will need to add yourself to the jackuser group:

sudo usermod -a -G jackuser <my_user_id>

To apply the change, log out of your session and log back in or, if you prefer, reboot your machine.
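If you want to confirm that the group change has been picked up, a check along these lines can help. It is only a sketch: `user_in_group` is a hypothetical helper name, and it relies on the standard `id` command.

```shell
# Hypothetical helper: succeeds (exit status 0) when user $1 belongs to group $2.
user_in_group() {
    id -nG "$1" 2>/dev/null | tr ' ' '\n' | grep -qx "$2"
}

me="$(id -un)"
if user_in_group "$me" jackuser; then
    echo "$me is in the jackuser group"
else
    echo "$me is not in the jackuser group yet"
fi
```

If the second message still appears after running usermod, the most likely cause is that the session has not been restarted.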

Using basic applications

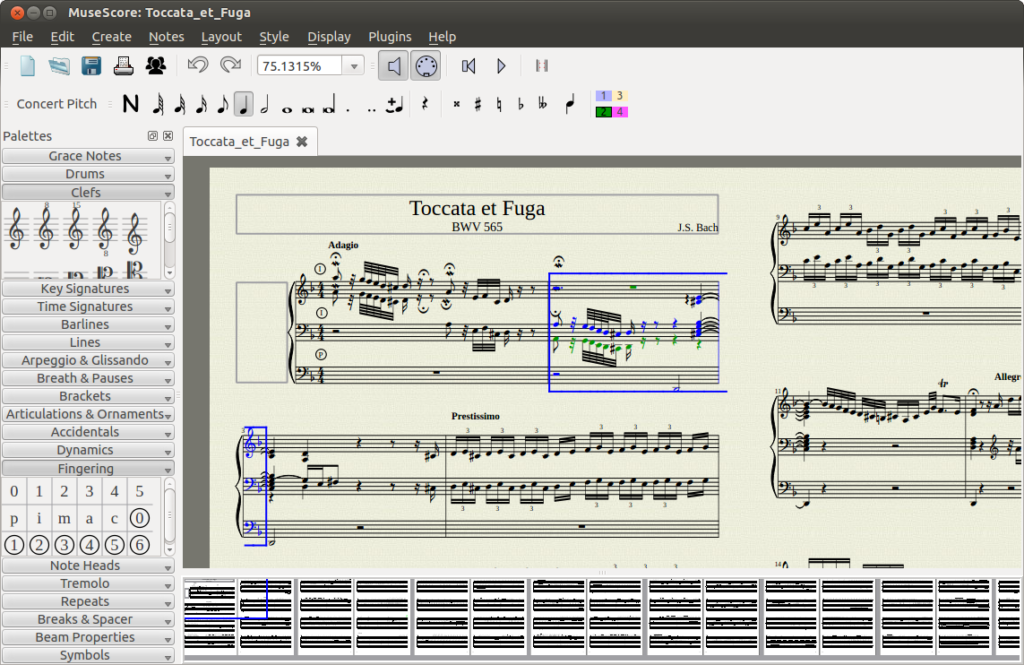

Now you can add some applications to play with, such as LMMS or MuseScore.

You can also record your voice using Audacity.

All of these applications are available in the main Fedora repository:

dnf install lmms mscore audacity

Fedora and your instrument, in real time

Configuration

Editor’s note: A real-time kernel is necessary for audio recording on your PC, especially when doing multitrack recording.

If you want to use your instrument (like an electric guitar) and use the sound of your instrument in some Fedora application, you will need to use Jack Audio Connection Kit with a real time kernel.

With the CCRMA repository, to install the real time kernel, use the following command as a root user:

dnf install kernel-rt

With the LinuxMAO Fedora COPR repository, use the following command:

dnf install kernel-rt-mao

The RT Kernel from CCRMA repository corresponds to a vanilla RT kernel with some Fedora patches applied whereas the one from the LinuxMAO repository is a pure vanilla one (a clean RT kernel without any patches).
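After rebooting, it can be useful to confirm which kernel is actually running. The sketch below assumes that a real-time kernel advertises PREEMPT_RT (or "PREEMPT RT" on older builds) in the output of `uname -v`. The helper name `is_rt_version` is made up for this example.

```shell
# Hypothetical helper: succeeds when a kernel version string (as printed
# by `uname -v`) advertises the real-time preemption patch set.
is_rt_version() {
    case "$1" in
        *PREEMPT_RT*|*"PREEMPT RT"*) return 0 ;;
        *) return 1 ;;
    esac
}

if is_rt_version "$(uname -v)"; then
    echo "Running a real-time kernel: $(uname -r)"
else
    echo "Running a standard kernel: $(uname -r)"
fi
```

If the standard kernel is reported, check that the real-time kernel was selected in the boot menu.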

Once this is done, we still need to tune QJackCtl to reduce the audio latency until it is negligible.

Click on the “Setup” button and set the following values:

- Sample rate: 48000 or 44100 (this is the sampling frequency; almost all commercially available sound cards support these values)

- Frames / period: 256

- Periods / Buffer: 2

- MIDI driver: seq (this value is required if you want to use a MIDI device)
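For readers who prefer the terminal, the same settings map roughly onto jackd's ALSA backend options. The sketch below only prints the command, so it is safe to try while QJackCtl is managing the server. It assumes the default sound card and the `-r`, `-p`, `-n` and `-X` options of the ALSA backend; the function name `jack_command` is made up for this example.

```shell
# Values from the Setup dialog.
rate=48000      # Sample rate (Hz)
frames=256      # Frames / period
periods=2       # Periods / buffer
midi=seq        # MIDI driver

# Print the equivalent jackd invocation instead of running it.
jack_command() {
    echo "jackd -d alsa -r $rate -p $frames -n $periods -X $midi"
}

jack_command
```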

With these parameters, you can easily achieve an audio latency of around 10 ms. This value is at the limit of what the human ear can perceive and is hardly noticeable. You can reach lower latency at the cost of increased CPU load.
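The figure of about 10 ms can be checked with a little arithmetic: the buffer holds frames × periods samples, and dividing by the sample rate gives its duration. The sketch below does this with awk. `latency_ms` is a hypothetical helper, and the result covers only the buffer itself, not any extra delay added by the hardware.

```shell
# latency_ms FRAMES PERIODS RATE -> buffer latency in milliseconds
latency_ms() {
    awk -v f="$1" -v p="$2" -v r="$3" 'BEGIN { printf "%.2f\n", f * p / r * 1000 }'
}

latency_ms 256 2 48000   # the settings listed above
latency_ms 256 2 44100   # same buffer at the lower sample rate
latency_ms 128 2 48000   # smaller period: lower latency, more CPU load
```

With the settings above this prints 10.67, 11.61 and 5.33, which shows how halving the frames per period halves the latency.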

Using Guitarix

To add some effects to your instrument, we will use Guitarix, a virtual guitar amplifier with a rack of effects.

dnf install guitarix

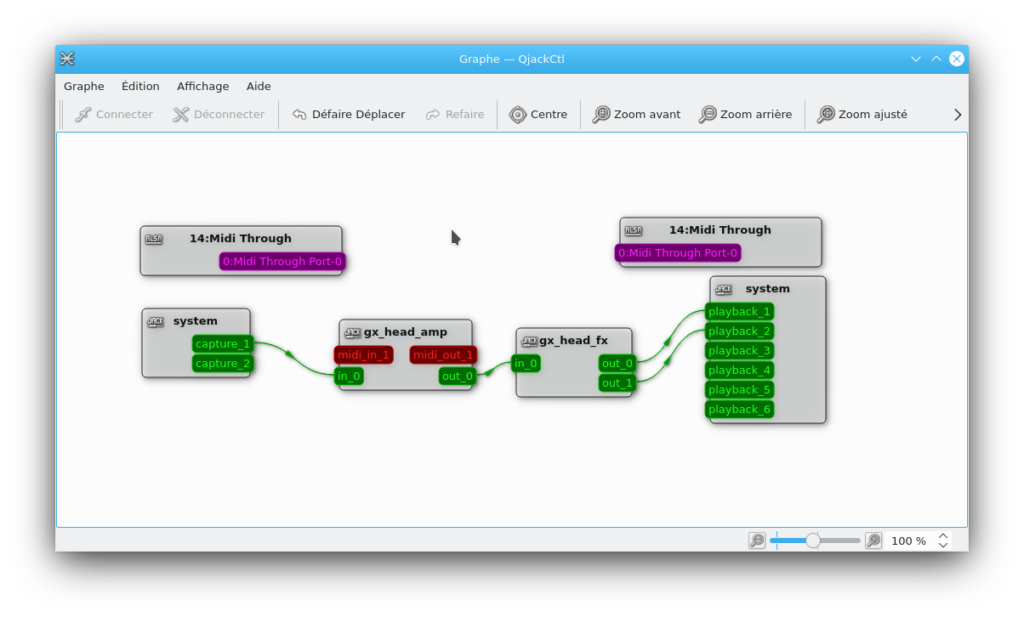

Now, you have to connect your instrument to the audio card (the internal one or a USB adapter). Editor’s note: This normally requires an interface between the electric guitar and the audio line-in of the audio card. There are also guitar-to-USB adapters. Once your instrument is connected, we will use QJackCtl to connect:

- the audio input to guitarix

- the guitarix mono rack to the guitarix stereo rack

- the guitarix stereo rack to the stereo audio output of your audio card

You do that by clicking on the Graph button of QJackCtl. Inside the Graph window, you just have to draw wires between the various elements. Each block represents an application. Guitarix is split into two blocks (preamp and rack). The preamp is where you select the amplifier characteristics, and the rack is where you apply mono and stereo effects. There are two other blocks with the system label for the audio input (the one on the left in the above figure) and the audio outputs (the one on the right).
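The same wiring can also be done from a terminal with the jack_connect tool. The sketch below is a dry run by default: it prints the commands rather than running them. The port names (`gx_head_amp`, `gx_head_fx`, `system:capture_1`, and so on) are assumptions about a typical setup, so list the real ones with jack_lsp before setting DRY_RUN=0. The function names `connect` and `wire_guitarix` are made up for this example.

```shell
# Set DRY_RUN=0 to really run the commands; by default they are only printed.
DRY_RUN="${DRY_RUN:-1}"

connect() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "jack_connect $1 $2"
    else
        jack_connect "$1" "$2"
    fi
}

wire_guitarix() {
    connect system:capture_1 gx_head_amp:in_0     # audio input -> preamp (mono)
    connect gx_head_amp:out_0 gx_head_fx:in_0     # preamp -> effects rack
    connect gx_head_fx:out_0 system:playback_1    # rack left -> output 1
    connect gx_head_fx:out_1 system:playback_2    # rack right -> output 2
}

wire_guitarix
```

Keeping the connections in a small script like this is one way to avoid redrawing the wires by hand in each session.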

Your instrument should be connected to the first audio input. You should test that your guitar is connected and that it can be heard when played. Most of the time, we use the first two slots of audio output, but this will depend on your audio card.

Editor’s note: The actual configuration of inputs and outputs depends upon the type of hardware chosen. The stereo speakers of the PC were chosen as the output in the example shown.

If the MIDI interface of the sound card is chosen, there are also two red blocks which are dedicated to MIDI inputs / outputs. These would then be set up as the input from the instrument and the output from the rack.

Guitarix is an amp plus a rack of effects for your instrument. It is mostly dedicated to guitar, but you can use it with synthesizers too.

Adding some backing tracks

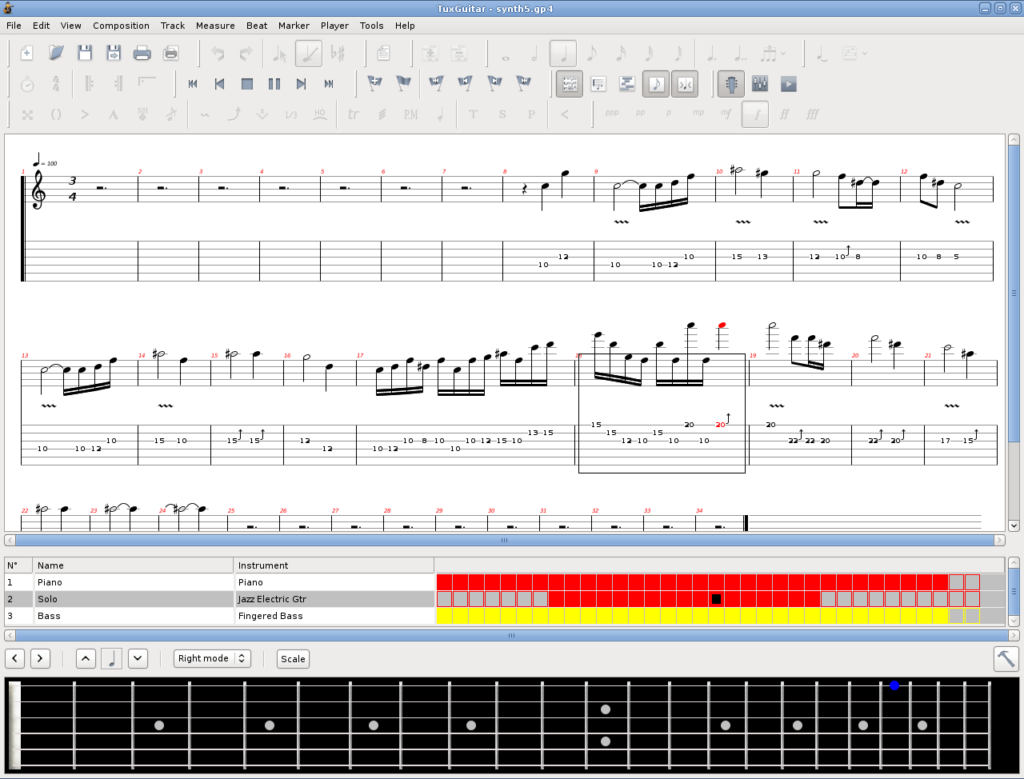

Better than just playing guitar on your own, you can play guitar with a group. To do this, we will install TuxGuitar.

dnf install tuxguitar

TuxGuitar will play GuitarPro files. These files contain scores for several instruments and can be played in real time. You just have to download a GuitarPro file from this website and open it with TuxGuitar.

Start TuxGuitar and click on Tools -> Plugins and check the fluidsynth plugin. Then, once fluidsynth is checked, click on Configure. Click on the Audio tab and select Jack as Audio Driver. In the Synthesizer tab, choose the same sampling frequency you chose for QJackCtl above (48000 or 44100 Hz).

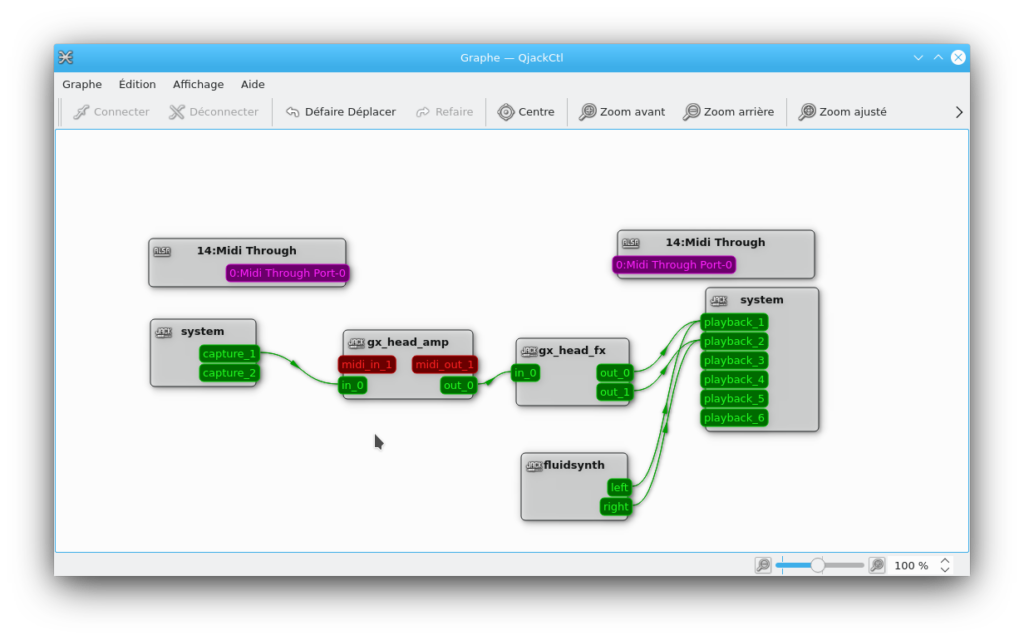

In the Soundfonts tab, you can add your own SF2 or SF3 file to improve the audio rendering. You can now close the Plugins window. Click on Tools -> Settings -> Sound. Here, you can select the kind of audio used to render the score. If you have several SF2 / SF3 files, this is where you select the one used for the audio rendering. Restart TuxGuitar once you’re satisfied with your selections. After the restart, a new block will appear in the Graph window of QJackCtl.

You just have to connect the block tagged ‘fluidsynth’ to the audio output, as you did with Guitarix.

Using MIDI devices

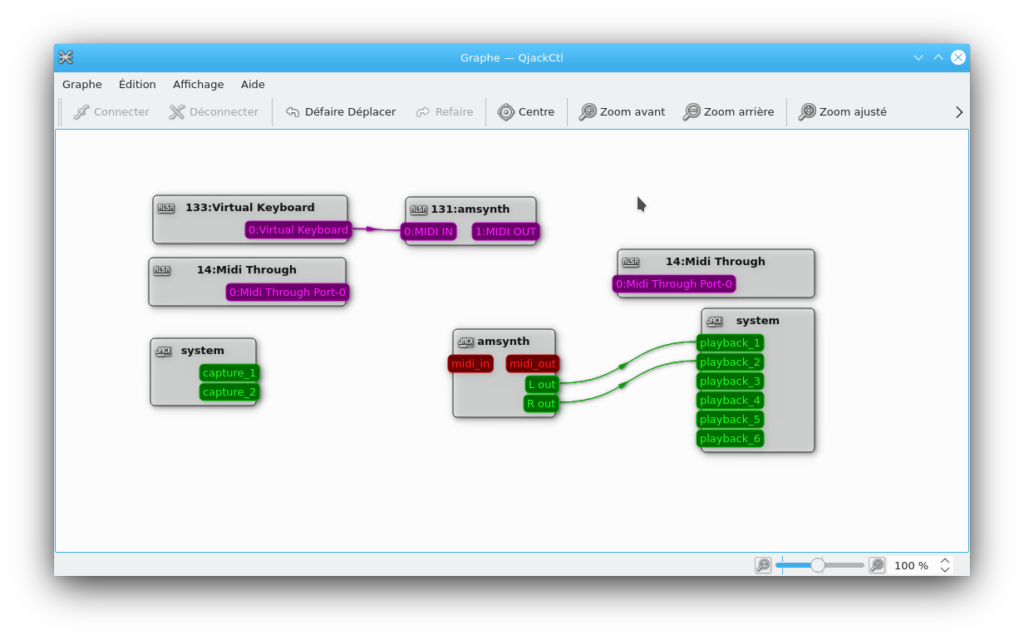

Using MIDI devices in real time is as easy as with audio. We will connect a virtual MIDI keyboard, vkeybd (the same procedure applies to a real MIDI device), to a MIDI synthesizer, amsynth.

dnf install amsynth vkeybd

Once you have started amsynth and vkeybd, you will see new connections in QJackCtl’s Graph window.

In this window, the red slots correspond to the Jack Audio MIDI connections whereas the purple ones correspond to the ALSA MIDI connections. Jack MIDI connections talk only to Jack MIDI connections, and the same goes for ALSA. If you want to connect a Jack MIDI connection to an ALSA MIDI connection, you will need to use a MIDI gateway: a2jmidid. You can read more about this in the Ardour manual.
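As a sketch of how the gateway might be started, the function below launches a2jmidid in the background if it is installed. The `-e` option, which also exports hardware ALSA MIDI ports to Jack, and the Fedora package name a2jmidid are assumptions to check on your system. `start_midi_bridge` is a name made up for this example.

```shell
# Hypothetical helper: start the ALSA-to-Jack MIDI bridge if it is installed.
start_midi_bridge() {
    if command -v a2jmidid >/dev/null 2>&1; then
        a2jmidid -e &      # -e: also export hardware ALSA MIDI ports
        echo "a2jmidid started (PID $!)"
    else
        echo "a2jmidid not found; try: dnf install a2jmidid"
        return 1
    fi
}

start_midi_bridge || echo "MIDI bridge not started"
```

Once the bridge is running, the ALSA MIDI ports should show up as additional Jack MIDI ports in the Graph window.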

We have now covered the main topics of audio under Fedora Linux. But there is a lot more you can do.

Other possibilities

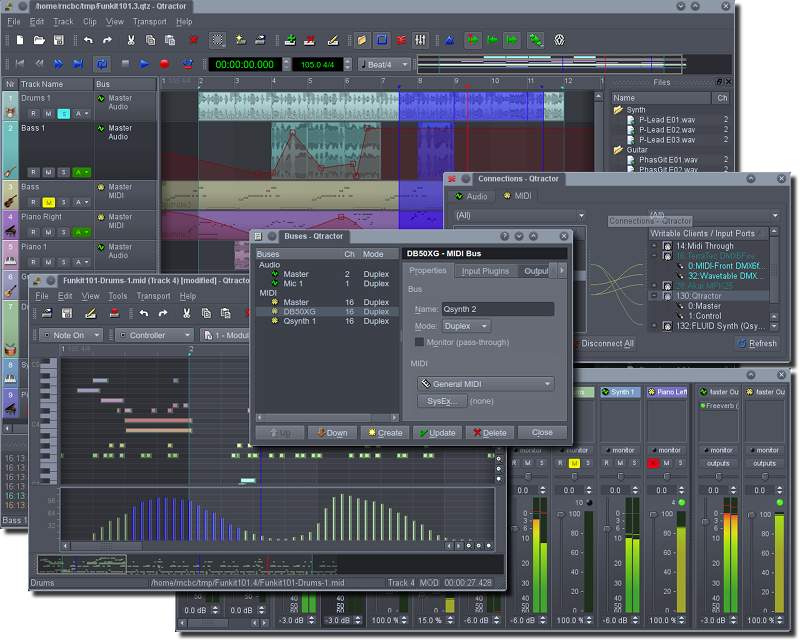

You can do multitrack recording with Ardour, Qtractor or Zrythm.

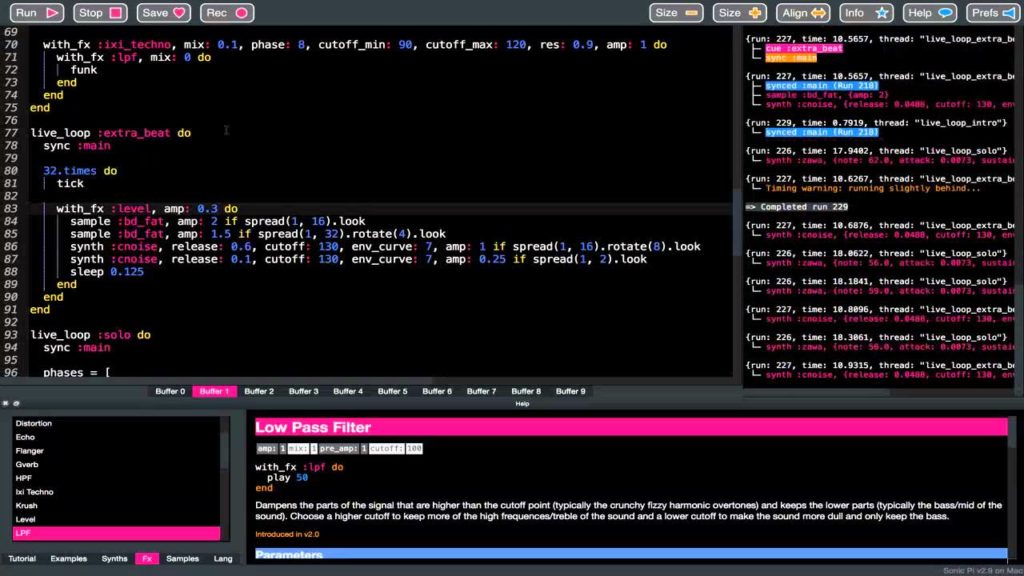

You can do live coding using SuperCollider or SonicPi.

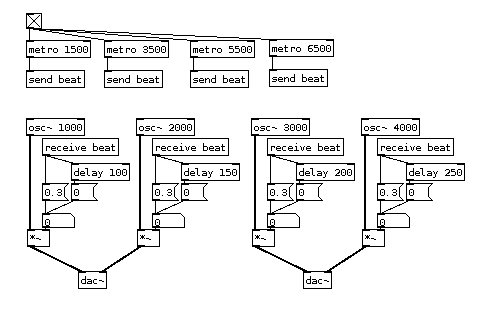

You can use a language made of connected blocks to build many things: PureData.

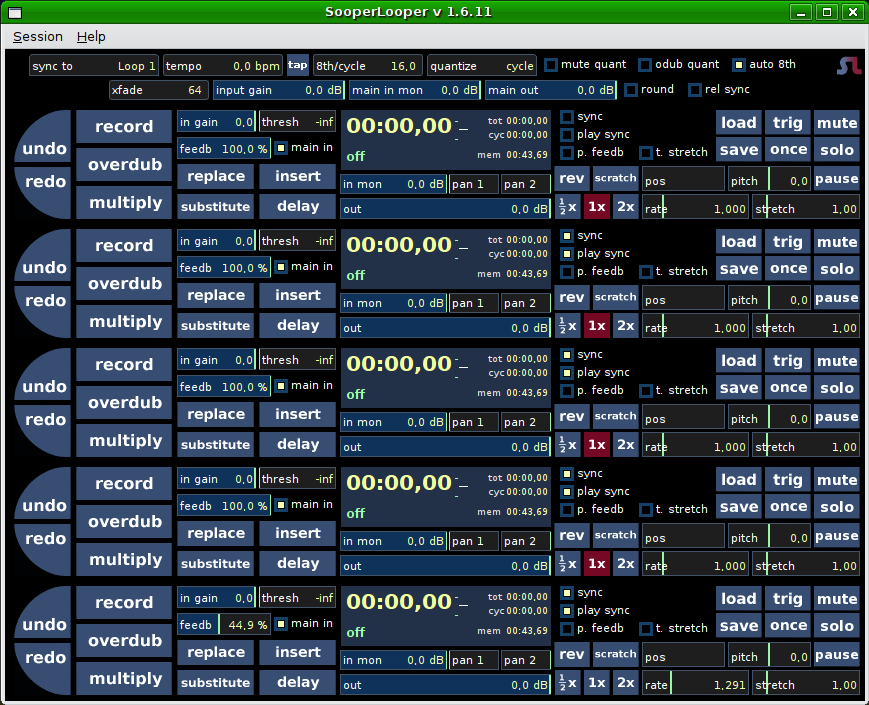

There is also a great audio looper available: SooperLooper.

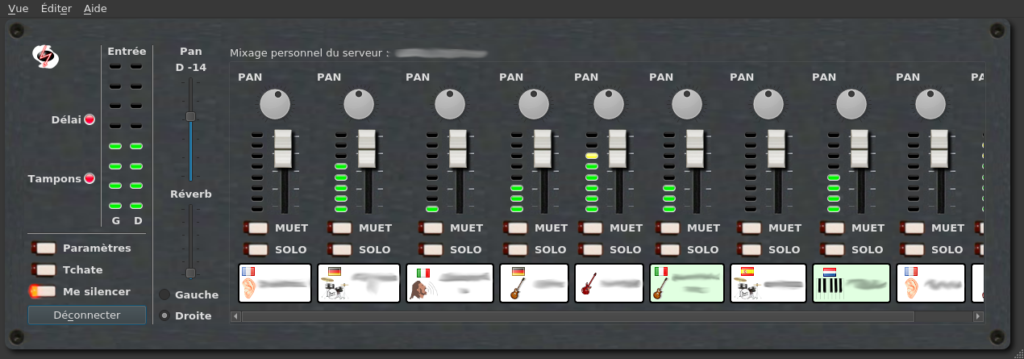

You can do live rehearsal through the internet: Jamulus.

Want to become the next famous DJ? Have a look at Mixxx.

Webography

Some links now:

Here is a YouTube video where I play guitar through Guitarix and I use TuxGuitar to play the backing tracks in real time. Both TuxGuitar and Guitarix are sent through non-mixer which is a small mixing application. To be able to record the audio of the session “on the fly”, I also use timemachine. And to avoid reconnecting everything each time I want to play guitar, I use Ray Session to start every application and connect all the Jack Audio connections.

I also made a small demonstration of the use of Jamulus for a live rehearsal. In this YouTube video, I mainly use Jamulus, QJackCtl and Guitarix. The second Guitarix is 30 km away. The latency was around 15 ms, which is quite small and hardly noticeable.

In this YouTube video, I tried to compare various SF2 / SF3 soundfont files. I used a GuitarPro file of Opeth’s song “Epilogue”.

In this YouTube video, I use MuseScore to play a GuitarPro file and I play along while my guitar sound is processed by Guitarix.

Here is a live performance with a dancer, using TuxGuitar + Non Session Manager + Non Mixer + Guitarix. I have always used this kind of combination and Linux has never hung. Fingers crossed!

Here are some compositions made with LMMS on Fedora 25 to 32, using some really nice plugins such as Surge, NoiseMaker from the DISTRHO package, and others. All these compositions are libre music and are hosted on Jamendo.

If you need some help:

- LinuxMusicians: a great place with skilled people willing to help

- LinuxMAO: if you speak French, this is the place to be. A lot of resources related to various software.

- LinuxAudio: another great website with various resources to help.

Bill Chatfield

This is a fantastic article. Thank you so much. I’m wondering why the real-time kernel is not distributed by default. It seems silly that it is not. If people try recording audio with a default kernel, which is what people expect because that is how it is done with Windows and Mac, they’re going to conclude that Linux sucks. This situation is really bad for Linux. Even if you know you need to install a real-time kernel, that is a lot of extra (unnecessary) work that you don’t have to do with other platforms. This all just makes Linux look bad.

Stephen Snow

You absolutely can record music from an instrument via the normal as shipped kernel, however if you want to reliably use MIDI say, you are going to need a RT-Kernel at some point since those devices are time domain dependent. Windows and Mac are not RT OOTB AFAIK.

Venn Stone

It might be a good idea to let everyone know installing a RT kernel will knacker their Nvidia, Blackmagic, and Mellanox drivers.

That’s why I use one compiled with Preemptible Kernel (Low-Latency Desktop) for the streaming box in the studio. It happily handles 12/15 channels of audio during a live show.

Stephen Snow

Hello Venn, that is a good point. Using a RT kernel is not absolutely necessary to be able to use the software noted in the article. Kernel changes outside of the shipped kernel and its updates will have an effect on the system, and sometimes not in a positive way.

Matthieu Huin

Interesting, could you elaborate? I’ve wanted to look into music with Fedora for a while, but I also want to benefit from my nvidia card for CUDA related stuff.

james

The nvidia proprietary driver does not compile against a realtime kernel, and all attempts at a hack have failed. It has been this way for years, unfortunately, but you can use the opensource driver with the realtime kernel.

From memory, you can disable the proprietary driver and enable the opensource driver on the kernel command line, but there is bound to be more to it than that. I just elected to use the opensource driver as I didn’t need the greater power.

Stephen Snow

A good article. I have a builtin sound device on my motherboard which provides a Line-in audio input. I have two guitar amplifiers that can provide a line-out audio signal in dual-mono, and a Tascam CD-GT1 which provides a dual-mono output at line level as well. I have recorded the guitar (without installing a RT-Kernel), and shared the recording with someone who was using a Mac to record a track onto it. We passed this file back and forth layering tracks onto it, me with Fedora and him with Macbook pro. My point is neither of us noticed timing issues during this exercise.

Anthony Stauss

Many thanks for this, and links to actual use videos!

Oleksiy Lukin

May I suggest installing QSynth + fluidsynth? I use it with a MIDI keyboard.

Oleksii Lukin

Sorry, first it was a question and there’s no possibility to edit.

Yann

I use QSynth from time to time to perform multitrack recording on qtractor.

I start tuxguitar as a MIDI sequencer and then, I connect tuxguitar MIDI output track by track to several QSynth (one by instrument). And then, I connect the qsynth audio output to qtractor for multitrack recording.

Here, you have the tuxguitar audio rendering using several SF2 soundfonts:

https://www.youtube.com/watch?v=p7fFXbFxLwI

nphilipp

Thanks for the article!

I have to disagree on one point, however: most people won’t need a realtime kernel for audio production. The mainline kernel as included in Fedora has been able to do realtime scheduling for a long time, and it’s been absolutely adequate for anything I could throw at it since I’ve switched back from using PlanetCCRMA’s kernel-rt package years ago. This means, on an aged hand-me-down computer, multi-track recording with up to 24 channels (limit of my audio HW), occasionally beefing up a miced instrument with sideband reverb in monitoring, even monitoring ITB with Ardour (though that’s admittedly and noticeably pushing it, at least for percussive sources, it would be something I’d consider using kernel-rt again for).

One question: what obstacles are there to having these packages in the official Fedora repos? They’d be much easier to discover, I only found your COPR repo when googling for some synth I found on linuxsynths.com…

Yann

Most of the work to put these packages in the official repo?

– first becoming an official RPM packager.

Once this is done, some of these packages will be “easy” to put in the official repo. Some others, almost impossible: the real time kernel for example. There are always security issues and other things like that.

And becoming an official packager is a work in progress on my side 🙂

bhavin192

Thank you for writing this, I might need some of this to connect my guitar 😉

Interested Party

What are recommended hardware setups for high quality electronic guitar music production?

Yann Collette

The only thing I recommend to record a guitar: a USB sound card with a preamplifier.

Having a preamplifier on the sound card is a huge plus on the sound of the guitar.

A good first card can be: Focusrite – Scarlett 2i2

If you are a professional, you can have a look at RME Fireface Ucx Interface Audio Desktop USB. Not the same price 🙂

Interested Party

Thanks. What about obtaining midi output?

Yann Collette

For MIDI: it’s quite easy.

– some audio cards (like some Focusrite models) have a MIDI in / out connector

– most synths have a USB MIDI connector which is supported out of the box in most cases

– You can also buy a USB / MIDI connector (around 15€) which will allow you to connect an old synth on Linux. I use such a connector to connect my Behringer FCB1010 pedal board to control Guitarix.

Dave Yarwood

For those who are interested in the idea of composing music in a text format and using a command line-oriented workflow to play and iterate on your compositions, there is also Alda, a music composition programming language: https://alda.io

Full disclosure: I am the creator of Alda 🙂

james

Hi, and thanks for the article. I’m glad to hear fedora jam is up and running, and that the planet ccrma still works. Both sort of disappeared for a while.

Do you know if falktx’s python apps cadence or claudia etc are available for fedora?

Pieter van Veen

James: cadence is available in the regular Fedora repo and AFAICT claudia is included in the cadence package. So all you have to do (from the command line as root) is:

dnf install cadence

Yann Collette

Carla and cadence are available on Fedora 32. I don’t know about Fedora 30 and 31 …

Bruno

Finding an efficient workflow is not easy, there are many choices, but applications are improving. And there is some good motivation: https://www.youtube.com/c/unfa000/

As for the packaging, I admit I ended up using the AppImage directly from MuseScore and the install script directly from Ardour. I gave up on official Fedora packages for Audio. And I use Github directly for Helm or RaySession or ZynFusion.

Maybe in a few years Flatpak will solve this.

We’ll see.